Cross-Entropy

Cross-entropy measures the average number of bits needed to identify an event drawn from a true probability distribution p when you use a coding scheme optimised for a different distribution q.

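It's closely related to Information Entropy (Information Theory), but it measures what happens when you identify messages using a coding scheme optimised for a different probability distribution from the one the messages are actually drawn from. If the two distributions are the same, cross-entropy equals the entropy. Otherwise it is larger.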
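To make the coding interpretation concrete, here is a small Python sketch that uses hypothetical distributions over four symbols (not from the source). Events are drawn from a true distribution p, and it compares a prefix-free code built for a uniform distribution q with one built for p itself. Every probability here is a power of 1/2, so the optimal codeword lengths are exactly -log2 of the probabilities, and the average lengths equal the cross-entropy and the entropy exactly. For other distributions, whole-bit codes can only approximate them. The helper name `average_length` is illustrative.

```python
# Hypothetical example distribution over four symbols (not from the source).
# p is the "true" distribution that events are actually drawn from.
p = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}

# Prefix-free code optimised for a uniform distribution q (each symbol 1/4):
# every symbol gets a 2-bit codeword.
code_for_q = {"a": "00", "b": "01", "c": "10", "d": "11"}

# Prefix-free code optimised for p itself: likelier symbols get shorter codewords.
code_for_p = {"a": "0", "b": "10", "c": "110", "d": "111"}


def average_length(true_dist, code):
    """Expected codeword length in bits when symbols are drawn from true_dist."""
    return sum(prob * len(code[symbol]) for symbol, prob in true_dist.items())


print(average_length(p, code_for_q))  # 2.0, the cross-entropy H(p, q)
print(average_length(p, code_for_p))  # 1.75, the entropy H(p)
```

The uniform code costs an extra quarter of a bit per symbol on average because it spends as many bits on the common symbol `a` as on the rare ones.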

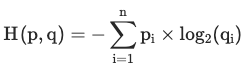

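For discrete distributions, it is expressed as:

$$H(p, q) = -\sum_{x} p(x) \log_2 q(x)$$

where p is the true distribution and q is the distribution the coding scheme was optimised for. Using a base-2 logarithm gives the result in bits.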
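The formula maps directly to code. The sketch below assumes distributions are given as Python dicts that map outcomes to probabilities. The function name `cross_entropy` and the example distributions are illustrative, not from the source. It follows the usual convention that terms with p(x) = 0 contribute nothing. It returns infinity when q assigns zero probability to an outcome that p can produce.

```python
import math


def cross_entropy(p, q):
    """Cross-entropy H(p, q) in bits for discrete distributions given as dicts.

    p is the true distribution and q is the distribution the code is optimised for.
    Terms with p(x) == 0 are skipped (the usual 0 * log 0 = 0 convention).
    If q(x) == 0 for some x with p(x) > 0, the result is infinite: an ideal
    code for q would need infinitely many bits for an event that can occur.
    """
    total = 0.0
    for x, px in p.items():
        if px == 0:
            continue
        qx = q.get(x, 0.0)
        if qx == 0:
            return math.inf
        total -= px * math.log2(qx)
    return total


# Hypothetical example distributions (not from the source).
p = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
q = {"a": 0.25, "b": 0.25, "c": 0.25, "d": 0.25}

print(cross_entropy(p, q))           # 2.0
print(cross_entropy(p, p))           # 1.75, the entropy of p
print(cross_entropy(p, {"a": 1.0}))  # inf
```

Passing the same distribution as both arguments gives the entropy, which matches the relationship between cross-entropy and Information Entropy described above.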

References

Wikipedia. Cross entropy. Wikipedia, July 2021. URL: https://en.wikipedia.org/wiki/Cross_entropy.