Categorical Cross-Entropy Loss

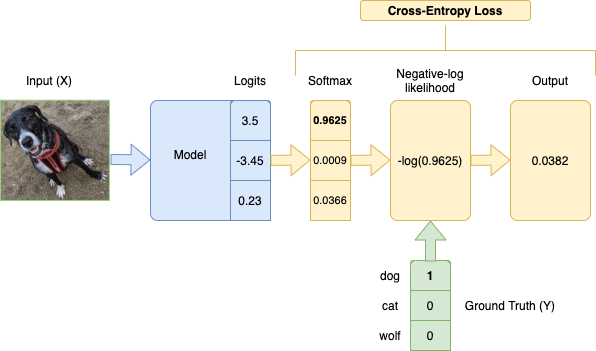

Categorical Cross-Entropy Loss Function, also known as Softmax Loss, is a Loss Function used to train multiclass classification models. It applies the Softmax Function to the model's raw outputs (logits) and then applies the Negative Log-Likelihood function to the resulting probabilities.

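A lower loss means the model's predicted probabilities are closer to the ground truth, i.e. the model assigns more probability to the correct class.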
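To make this concrete, here is a minimal pure-Python sketch that applies softmax followed by the negative log to three sets of logits. The helper name `cross_entropy_from_logits` is hypothetical, and apart from the first set (taken from the PyTorch example in this note), the logit values are made up for illustration.

```python
import math

def cross_entropy_from_logits(logits, true_index):
    # Hypothetical helper: softmax (exponentiate, then normalize so the values sum to 1).
    # No max-subtraction here, which is fine for small logits like these.
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Negative log of the probability assigned to the true class
    return -math.log(probs[true_index])

true_class = 0
confident_right = cross_entropy_from_logits([3.5, -3.45, 0.23], true_class)
unsure = cross_entropy_from_logits([0.1, 0.0, 0.1], true_class)
confident_wrong = cross_entropy_from_logits([-3.0, 4.0, 0.5], true_class)

# roughly 0.04, 1.07, 7.03
print(round(confident_right, 2), round(unsure, 2), round(confident_wrong, 2))
```

The more probability the model puts on the true class, the smaller the loss; a confident wrong prediction is penalized heavily.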

In math, it is expressed as

$$P = \mathrm{softmax}(O)$$

$$L = -\sum_{i=1}^{N} Y_i \log(P_i)$$

where $N$ is the number of classes, $Y$ is the one-hot encoded ground-truth label vector, $O$ is the vector of model outputs (logits), and $P$ is the vector of predicted probabilities. Since $Y$ is one-hot encoded, every term for a class other than the true one is multiplied by 0, so the loss reduces to the negative log of the predicted probability for the true class.
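The formula can be evaluated term by term in PyTorch. This sketch assumes a one-hot vector `y` whose true class is index 0, and reuses the logits from the example later in this note.

```python
import torch

logits = torch.tensor([3.5, -3.45, 0.23])  # O: raw model outputs
y = torch.tensor([1.0, 0.0, 0.0])          # Y: one-hot label, true class is index 0

p = torch.softmax(logits, dim=0)           # P = softmax(O)
terms = y * torch.log(p)                   # Y_i * log(P_i) for each of the N classes
loss = -terms.sum()                        # L = -sum over all classes

print(terms)  # only the first (true-class) entry is non-zero
print(loss)   # tensor(0.0382)
```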

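In the PyTorch implementation (`nn.CrossEntropyLoss`), the index of the ground-truth class is passed instead of a one-hot encoded $Y$ vector, and the raw logits are passed instead of probabilities, since the softmax is applied internally.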
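As a side note, recent PyTorch versions (assumed here to be 1.10 or later) also let `nn.CrossEntropyLoss` take class probabilities as the target, so a one-hot vector gives the same result as the class index. A minimal sketch comparing the two:

```python
import torch
import torch.nn.functional as F
from torch import nn, tensor

logits = tensor([[3.5, -3.45, 0.23]])  # shape: [batch, classes]
loss_fn = nn.CrossEntropyLoss()

# Target as a class index, shape [batch]
index_target = tensor([0])
# Same target as one-hot probabilities, shape [batch, classes], must be float
one_hot_target = F.one_hot(index_target, num_classes=3).float()

print(loss_fn(logits, index_target))
print(loss_fn(logits, one_hot_target))  # same value as the index-based call
```

The index form is the more common and cheaper choice; the probability form is what you would use for soft labels such as label smoothing or distillation targets.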

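```python
import torch
from torch import nn, tensor

torch.set_printoptions(sci_mode=False)

# Ground-truth class as an index, not a one-hot vector
dog_class_index = 0
label = tensor([dog_class_index])

# Raw logits for a batch of one example with 3 classes
logits = tensor([[3.5, -3.45, 0.23]])

nn.CrossEntropyLoss()(logits, label)
```

```
tensor(0.0382)
```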
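With more than one example, `nn.CrossEntropyLoss` averages the per-example losses by default (`reduction="mean"`). In the sketch below the second row of logits and its label are made up for illustration; `reduction="none"` returns one loss per example so the averaging can be checked.

```python
import torch
from torch import nn, tensor

# Hypothetical batch: 2 examples, 3 classes each
logits = tensor([[3.5, -3.45, 0.23],
                 [0.1, 2.0, -1.0]])
labels = tensor([0, 1])  # true class index for each example

per_example = nn.CrossEntropyLoss(reduction="none")(logits, labels)  # shape [2]
mean_loss = nn.CrossEntropyLoss()(logits, labels)                     # default: mean

print(per_example)
print(mean_loss)  # equals per_example.mean()
```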

We can also get the same result manually by applying softmax to the logits and then taking the negative log of the probability assigned to the true class.

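```python
# Convert logits to probabilities across the class dimension
softmax_probs = nn.Softmax(dim=1)(logits)
softmax_probs
```

```
tensor([[ 0.9625, 0.0009, 0.0366]])
```

```python
# Negative log of the probability assigned to the true class
-torch.log(softmax_probs[:,label])
```

```
tensor([[0.0382]])
```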
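PyTorch documents `nn.CrossEntropyLoss` as combining log-softmax with negative log-likelihood loss, and the functional equivalents are `F.log_softmax` and `F.nll_loss`. Computing log-softmax in one step is more numerically stable than calling softmax and then log. The extreme logits `[0.0, 200.0]` below are made up to show the difference: in float32 the softmax probability of class 0 underflows to 0, so the manual route yields infinity.

```python
import torch
import torch.nn.functional as F
from torch import tensor

logits = tensor([[3.5, -3.45, 0.23]])
label = tensor([0])

log_probs = F.log_softmax(logits, dim=1)  # log(softmax(O)) in one stable step
loss = F.nll_loss(log_probs, label)       # -log_probs[i, label[i]], averaged over the batch
print(loss)                               # matches F.cross_entropy(logits, label)

# Hypothetical extreme logits to show the stability difference
extreme = tensor([[0.0, 200.0]])
target = tensor([0])
naive = -torch.log(torch.softmax(extreme, dim=1)[0, 0])  # softmax underflows to 0, giving inf
stable = F.cross_entropy(extreme, target)                # about 200.0
print(naive, stable)
```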

The loss is based on Cross-Entropy from Information Theory, which measures the difference between two probability distributions; here, the one-hot ground-truth distribution $Y$ and the predicted distribution $P$.
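In information theory, the cross-entropy of a distribution $q$ relative to a true distribution $p$ is $H(p, q) = -\sum_x p(x) \log q(x)$. The sketch below (helper names are hypothetical) shows that with a one-hot $p$ it gives the same value as the loss above, and that for a soft $p$ it is never smaller than the entropy $H(p)$. The gap $H(p, q) - H(p)$ is the KL divergence, so minimizing cross-entropy over $q$ is the same as minimizing KL divergence to $p$.

```python
import math

def cross_entropy(p, q):
    # H(p, q) = -sum_x p(x) * log(q(x)), in nats; terms with p(x) = 0 contribute 0
    return -sum(px * math.log(qx) for px, qx in zip(p, q) if px > 0)

def entropy(p):
    # H(p) is the cross-entropy of p with itself
    return cross_entropy(p, p)

p = [1.0, 0.0, 0.0]               # one-hot ground truth
q = [0.9625, 0.0009, 0.0366]      # rounded softmax output from the example above
print(cross_entropy(p, q))        # about 0.0382, same as the loss

p_soft = [0.7, 0.2, 0.1]          # made-up soft target distribution
print(cross_entropy(p_soft, q) >= entropy(p_soft))  # True (Gibbs' inequality)
```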

[@howardDeepLearningCoders2020] (pg. 222-231)