NoProp: Training Neural Networks Without Back-Propagation or Forward-Propagation

An alternative training method to backpropagation in which each layer learns locally.

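To make "local layer learning" concrete, here is a toy sketch of generic layer-wise local training. It is not NoProp's own procedure, whose per-layer objective differs. Assumptions: two scalar "layers" (`w1`, `w2`), a hypothetical helper `train_local`, and a local squared-error loss per layer against the target. Each layer's gradient is computed only from its own loss, and no gradient flows back from layer 2 into layer 1.

```python
def train_local(xs, ys, lr=0.01, epochs=200):
    """Toy layer-wise local learning (illustrative, not NoProp itself).

    Layer 1: h = w1 * x, trained on its own local loss (h - y)^2.
    Layer 2: z = w2 * h, trained on (z - y)^2 with h treated as a fixed input.
    """
    w1, w2 = 0.5, 0.5
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            # Layer 1: the gradient of its local loss depends only on w1 and x.
            h = w1 * x
            g1 = 2 * (h - y) * x
            # Layer 2: h is treated as a constant, so this gradient updates only w2.
            z = w2 * h
            g2 = 2 * (z - y) * h
            w1 -= lr * g1
            w2 -= lr * g2
    return w1, w2


xs = [0.1 * i for i in range(1, 11)]
ys = [3.0 * x for x in xs]  # target function: y = 3x
w1, w2 = train_local(xs, ys)
print(round(w1, 3), round(w2, 3))
```

Because layer 1 is trained to predict `y` directly, it settles near `w1 = 3` and layer 2 near `w2 = 1`. End-to-end backpropagation on the final output would only constrain the product `w1 * w2`.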

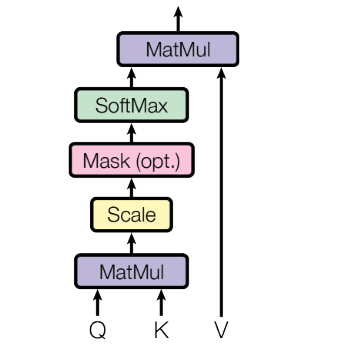

A method for computing token representations that incorporate the context of the surrounding tokens.
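Self-attention is one common way to build such context-aware token representations. Assuming that is the mechanism meant here, the sketch below implements scaled dot-product self-attention in plain Python. Simplification: queries, keys and values are the raw token vectors, with no learned projection matrices. The function name `contextualize` is hypothetical.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextualize(tokens):
    """Return one context-aware vector per token via scaled dot-product self-attention.

    Simplification: queries, keys and values are the raw token vectors
    (no learned W_q, W_k, W_v projections).
    """
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Similarity of this token (query) to every token (key), scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        # New representation: attention-weighted average of all token vectors (values).
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for vec in contextualize(tokens):
    print([round(x, 3) for x in vec])
```

Each output vector is a weighted mix of every token in the sequence, so a token's representation now depends on its neighbours. A lone token attends only to itself and comes back unchanged.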

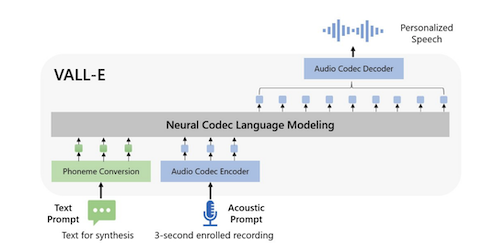

VALL-E can generate speech in anyone's voice from just a 3-second recording of the speaker plus the text to be spoken.

An activation function for modelling data with periodicity (repeating patterns).
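One periodic activation from the literature is Snake, `x + sin²(a·x) / a`. Assuming that is the function these notes cover, here is a minimal plain-Python sketch. The default `a = 1.0` is an illustrative choice. In practice `a` can be a fixed hyperparameter or learned.

```python
import math


def snake(x, a=1.0):
    """Snake activation: x + sin^2(a * x) / a.

    The linear term x lets the output keep growing (so it can extrapolate a trend),
    while the sin^2 term adds a periodic ripple with period pi / a.
    """
    return x + math.sin(a * x) ** 2 / a


for x in [-2.0, 0.0, 1.0, math.pi]:
    print(x, round(snake(x), 4))
```

Unlike ReLU or tanh, the `sin²` component repeats indefinitely, which gives the network a built-in bias toward periodic structure outside the training range.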

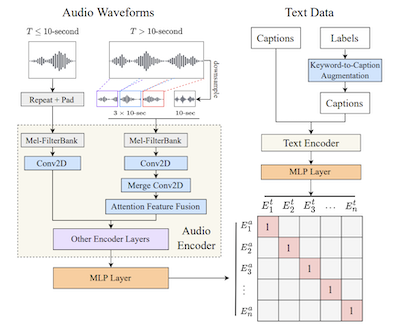

Notes from the paper "Large-scale Contrastive Language-Audio Pre-training with Feature Fusion and Keyword-to-Caption Augmentation" by Yusong Wu, Ke Chen, Tianyu Zhang, Yuchen Hui, Taylor Berg-Kirkpatrick, Shlomo Dubnov.
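Contrastive language-audio pre-training pulls matching audio/text embedding pairs together and pushes mismatched pairs in the same batch apart. The sketch below shows a generic symmetric contrastive (cross-entropy over a similarity matrix) loss in plain Python. Assumptions: the helper names are hypothetical, embeddings are already computed, and the fixed `temperature=0.07` is illustrative rather than the paper's setting.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits, target):
    # -log softmax(logits)[target], computed with the log-sum-exp trick.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]


def symmetric_contrastive_loss(audio_emb, text_emb, temperature=0.07):
    """Audio i matches text i; every other pairing in the batch is a negative."""
    a = [l2_normalize(v) for v in audio_emb]
    t = [l2_normalize(v) for v in text_emb]
    n = len(a)
    # sim[i][j]: cosine similarity of audio i and text j, divided by the temperature.
    sim = [[sum(x * y for x, y in zip(a[i], t[j])) / temperature for j in range(n)]
           for i in range(n)]
    # Audio-to-text: each row should pick out its own caption.
    audio_to_text = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    # Text-to-audio: each column should pick out its own clip.
    text_to_audio = sum(cross_entropy([sim[i][j] for i in range(n)], j) for j in range(n)) / n
    return (audio_to_text + text_to_audio) / 2


matched = symmetric_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = symmetric_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(matched, swapped)
```

Correctly aligned pairs give a loss near zero, while swapping the captions gives a large loss. Minimizing this loss is what shapes the shared audio-text embedding space.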