Mixture-of-Recursions: Learning Dynamic Recursive Depths for Adaptive Token-Level Computation

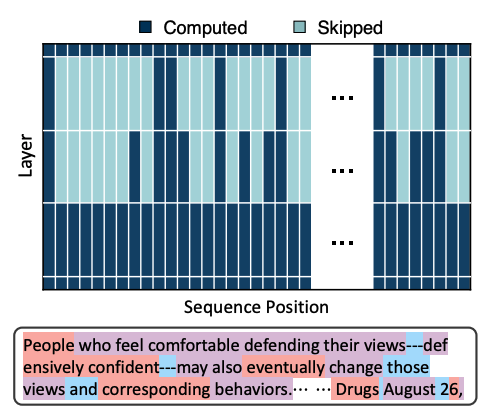

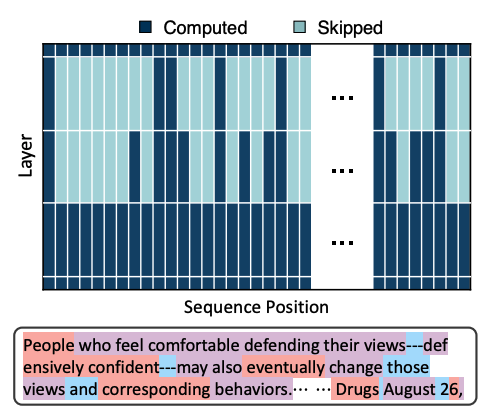

Optimising computation at the token level

Learns to reason without any human-annotated data.

Routes LLM tasks to cheaper or more powerful models based on task novelty.

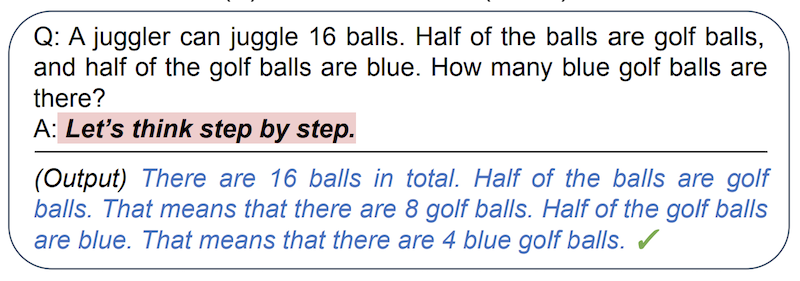

Improves zero-shot prompt performance of LLMs by adding “Let’s think step by step” before each answer.

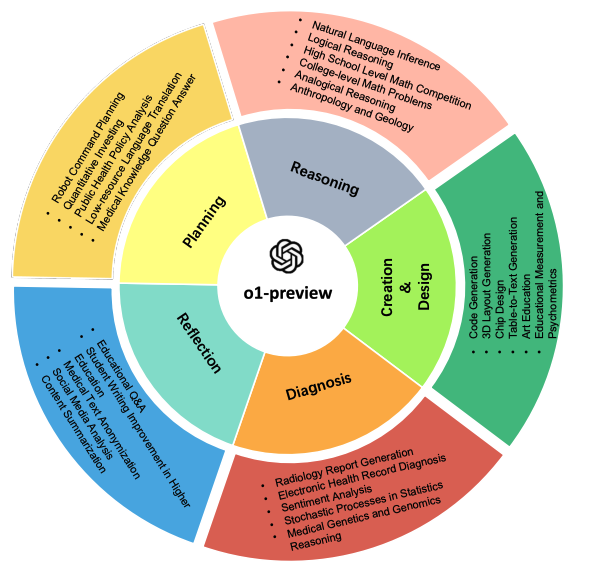

A comprehensive evaluation of o1-preview across many tasks and domains.

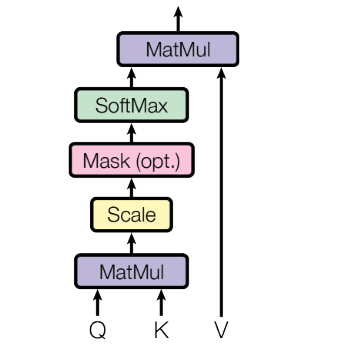

A method of computing a token representation that includes the context of surrounding tokens.