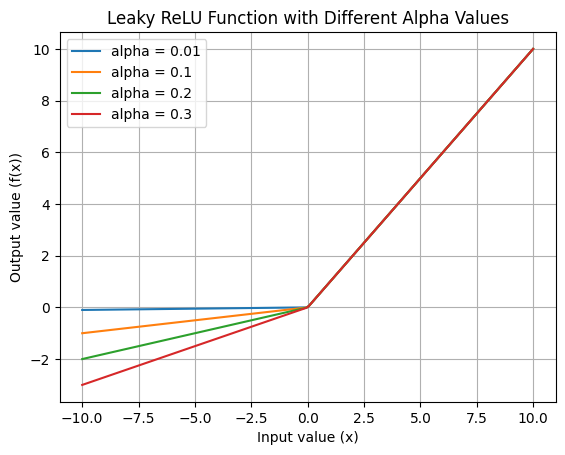

Leaky ReLU

Leaky ReLU is a modification of the ReLU (Rectified Linear Unit) activation function. Instead of outputting 0 for negative inputs, it outputs a small negative value.

This small value is produced by multiplying the input x by a fixed slope, usually written α (alpha), such as 0.1.
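Written as a formula, with α as the fixed negative-side slope, the function and its derivative are shown below. The derivative at exactly x = 0 is undefined, and implementations simply pick one of the two branches there.

$$
f(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \le 0 \end{cases}
\qquad
f'(x) = \begin{cases} 1, & x > 0 \\ \alpha, & x < 0 \end{cases}
$$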

In code:

```python
import numpy as np

def leaky_relu(x, alpha=0.1):
    # Keep positive inputs unchanged; scale non-positive inputs by alpha.
    return np.where(x > 0, x, alpha * x)
```

It's commonly used for training generative adversarial networks and other networks with sparse gradients. Because the gradient for negative inputs is α rather than 0, neurons with negative inputs still receive weight updates, so the model can keep learning from inputs that standard ReLU would zero out. The cost is an extra hyperparameter, α. At α = 0, Leaky ReLU is simply ReLU.
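The two claims above can be checked numerically. The sketch below defines a gradient function, `leaky_relu_grad`. This is a hypothetical helper added for illustration and is not part of the original code. It also assumes the same `alpha=0.1` default. The sketch shows that negative inputs get a gradient of α instead of 0, and that setting `alpha=0.0` reproduces standard ReLU.

```python
import numpy as np

def leaky_relu(x, alpha=0.1):
    # Keep positive inputs unchanged; scale non-positive inputs by alpha.
    return np.where(x > 0, x, alpha * x)

def leaky_relu_grad(x, alpha=0.1):
    # Hypothetical helper: derivative of leaky_relu.
    # 1 for x > 0, alpha otherwise (x == 0 falls in the alpha branch by convention here).
    return np.where(x > 0, 1.0, alpha)

x = np.array([-2.0, -0.5, 0.0, 1.5])

# Negative inputs still receive a non-zero gradient (alpha).
print(leaky_relu_grad(x).tolist())  # [0.1, 0.1, 0.1, 1.0]

# With alpha = 0 the function reduces to standard ReLU, max(0, x).
print(np.array_equal(leaky_relu(x, alpha=0.0), np.maximum(0.0, x)))  # True
```

With standard ReLU, the first three gradient entries would be 0, so the weights feeding those units would get no update from these inputs.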