Softmax Activation Function

The Softmax Activation Function converts a vector of numbers into a vector of probabilities that sum to 1. It's applied to a model's outputs (or Logits) in Multi-class Classification.

It is the multi-class extension of the Sigmoid Activation Function.
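One way to see the connection: a softmax over the two logits `[x, 0]` assigns the first class the same probability as the sigmoid of `x`. The sketch below checks this numerically. The helper names `sigmoid` and `softmax` are illustrative, and it uses only the standard library so it runs without dependencies.

```python
import math

def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))

def softmax(xs):
    """Softmax over a list of floats."""
    exps = [math.exp(x) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

x = 1.5
two_class = softmax([x, 0.0])[0]  # e^x / (e^x + 1)
print(two_class, sigmoid(x))      # the two values agree up to rounding
```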

The equation is:

$$
\text{softmax}(x_i) = \frac{e^{x_i}}{\sum_{j} e^{x_j}}
$$

The intuition is that $e^{x}$ is always positive and grows quickly, so larger inputs are amplified relative to smaller ones. Softmax therefore tends to pick out a single class, which makes it less useful for problems where you are unsure whether every input will contain one of the labels. In that case, use multiple binary columns with the Sigmoid Activation Function.
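To illustrate the point about inputs that may contain no label, the sketch below feeds the same uniformly low logits through softmax and through a per-class sigmoid. The logit values are made up for illustration and the helper names are hypothetical. It uses plain Python rather than NumPy.

```python
import math

def softmax(xs):
    """Softmax over a list of floats."""
    exps = [math.exp(x) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    """Logistic sigmoid applied to a single logit."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical logits where the model sees no strong evidence for any class.
logits = [-4.0, -3.0, -5.0]

softmax_probs = softmax(logits)               # forced to sum to 1
sigmoid_probs = [sigmoid(x) for x in logits]  # each class judged independently

print(softmax_probs)  # one class still receives most of the probability mass
print(sigmoid_probs)  # every class stays close to 0
```

With softmax, one class wins even though every logit is low. With independent sigmoids, a threshold (for example 0.5) can report that no label is present.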

Howard et al. (2020) (pg. 223-227)

Code example:

import numpy as np
import pandas as pd

def softmax(x):
    # Exponentiate each logit, then normalise so the outputs sum to 1.
    return np.exp(x) / np.exp(x).sum()

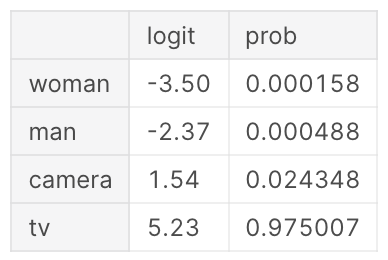

logits = np.array([-3.5, -2.37, 1.54, 5.23]) # some arbitrary numbers I made up that could have come out of a neural network

probs = softmax(logits)

pd.DataFrame({'logit': logits, 'prob': probs}, index=['woman', 'man', 'camera', 'tv'])

| | logit | prob |
|---|---|---|
| woman | -3.50 | 0.000158 |
| man | -2.37 | 0.000488 |
| camera | 1.54 | 0.024348 |
| tv | 5.23 | 0.975007 |
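The `softmax` function in the code example exponentiates the raw logits, which can overflow when they are large. With NumPy this yields `inf` and then `nan`. A common variant, not taken from the source, subtracts the largest logit first. This leaves the result unchanged because softmax is invariant to adding a constant to every input. A minimal sketch in plain Python, where `stable_softmax` is a hypothetical name:

```python
import math

def stable_softmax(xs):
    """Softmax that subtracts the max logit before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]  # largest exponent is e^0 = 1
    total = sum(exps)
    return [e / total for e in exps]

big = [1000.0, 1001.0, 1002.0]  # math.exp(1000.0) alone would raise OverflowError
small = [0.0, 1.0, 2.0]         # the same logits shifted by a constant

print(stable_softmax(big))
print(stable_softmax(small))    # matches the line above: softmax is shift-invariant
```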

Softmax is the first step of the Categorical Cross-Entropy Loss: it is applied to the logits, and the log of the resulting probabilities is then passed to the Negative Log-Likelihood function.
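The relationship can be sketched for a single example as a log-softmax followed by negative log-likelihood. This is one common formulation, written in plain Python. The helper names `log_softmax`, `nll` and `cross_entropy` are illustrative, not a particular library's API.

```python
import math

def log_softmax(logits):
    """Log of the softmax probabilities, computed via log-sum-exp."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]

def nll(log_probs, target):
    """Negative log-likelihood of the target class index."""
    return -log_probs[target]

def cross_entropy(logits, target):
    """Cross-entropy for one example: log-softmax followed by NLL."""
    return nll(log_softmax(logits), target)

logits = [-3.5, -2.37, 1.54, 5.23]            # same logits as the code example
loss_tv = cross_entropy(logits, target=3)     # correct class is 'tv'
loss_woman = cross_entropy(logits, target=0)  # correct class is 'woman'

print(loss_tv, loss_woman)  # small loss for the confident class, large for the unlikely one
```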

References

Jeremy Howard, Sylvain Gugger, and Soumith Chintala. Deep Learning for Coders with Fastai and PyTorch: AI Applications without a PhD. O'Reilly Media, Inc., Sebastopol, California, 2020. ISBN 978-1-4920-4552-6.