Information Entropy

Entropy is a measure of uncertainty of a random variable's possible outcomes.

It's highest when there are many equally likely outcomes. As you introduce more predictability (one of the possible values has a higher probability than the others), entropy decreases.

It measures how many yes/no "questions" you need, on average, to identify a value drawn from the distribution. Since you'd start by asking about the most likely values, a distribution with low entropy needs fewer questions, and so a smaller message size on average, to communicate an outcome.
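One way to make the "questions" idea concrete is to build a question strategy and count. The sketch below is an illustration, not part of the original note: it assumes a Huffman-style strategy (repeatedly grouping the two least likely outcomes), and the names `average_questions` and `entropy_bits` are hypothetical. For probabilities that are powers of 1/2 the average number of questions matches the entropy exactly; in general it can only be equal or larger.

```python
import heapq
import math

def average_questions(dist):
    """Expected number of yes/no questions under a Huffman-style strategy."""
    # Each heap entry is (probability of a group of outcomes, tie-breaker id).
    heap = [(p, i) for i, p in enumerate(dist)]
    heapq.heapify(heap)
    next_id = len(dist)
    total = 0.0
    # Merging the two least likely groups adds one question for every
    # outcome inside them, so the merged probability is added to the total.
    while len(heap) > 1:
        p1, _ = heapq.heappop(heap)
        p2, _ = heapq.heappop(heap)
        total += p1 + p2
        heapq.heappush(heap, (p1 + p2, next_id))
        next_id += 1
    return total

def entropy_bits(dist):
    # Shannon entropy in bits, skipping zero-probability outcomes.
    return -sum(p * math.log2(p) for p in dist if p > 0)

skewed = [0.5, 0.25, 0.125, 0.125]
uniform = [0.25] * 4
print(average_questions(skewed), entropy_bits(skewed))    # 1.75 1.75
print(average_questions(uniform), entropy_bits(uniform))  # 2.0 2.0
```

The skewed distribution needs fewer questions on average than the uniform one over the same four outcomes, which is the sense in which lower entropy means shorter messages.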

The entropy of a variable from distribution $p$ is expressed as:

$$H = \sum_{i=1}^{n} p_i \times \log_2\left(\frac{1}{p_i}\right)$$

Because $\log_2(1/p_i) = -\log_2(p_i)$, the expression is commonly rewritten like this:

$$H = -\sum_{i=1}^{n} p_i \times \log_2(p_i)$$
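As a quick check that both forms give the same number, take a three-outcome distribution with probabilities 1/2, 1/4 and 1/4 (values chosen only for illustration, not taken from the examples in this note). Using the first form:

$$H = \tfrac{1}{2} \times \log_2(2) + \tfrac{1}{4} \times \log_2(4) + \tfrac{1}{4} \times \log_2(4) = \tfrac{1}{2} + \tfrac{1}{2} + \tfrac{1}{2} = 1.5$$

Using the second form:

$$H = -\left(\tfrac{1}{2} \times \log_2\tfrac{1}{2} + \tfrac{1}{4} \times \log_2\tfrac{1}{4} + \tfrac{1}{4} \times \log_2\tfrac{1}{4}\right) = -\left(-\tfrac{1}{2} - \tfrac{1}{2} - \tfrac{1}{2}\right) = 1.5$$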

When using log base 2, the unit of Entropy is a bit (a yes or no question).
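The base of the logarithm only changes the unit, not the ranking of distributions. A minimal sketch, assuming a hypothetical helper `entropy_in_base` that is not part of the original code: base 2 gives bits, and base e gives the unit usually called nats, with the two related by a factor of ln 2.

```python
import math

def entropy_in_base(dist, base=2):
    # Hypothetical helper: base=2 gives bits, base=math.e gives nats.
    return -sum(p * math.log(p, base) for p in dist if p > 0)

fair_coin = [0.5, 0.5]
bits = entropy_in_base(fair_coin, 2)
nats = entropy_in_base(fair_coin, math.e)

print(bits)                # entropy of a fair coin in bits
print(nats)                # the same entropy in nats, roughly 0.693
print(nats / math.log(2))  # converting nats back to bits
```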

In code:

import numpy as np

def entropy(dist):
    # Shannon entropy in bits; assumes every probability in dist is nonzero
    return -np.sum(np.array(dist) * np.log2(dist))
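One caveat with this function: if `dist` contains a probability of exactly 0, `np.log2(0)` is `-inf` and the product `0 * -inf` is `nan`, so the result is `nan`. A possible variant, sketched here under the usual convention that an impossible outcome contributes nothing to the sum (the name `entropy_safe` is hypothetical):

```python
import numpy as np

def entropy_safe(dist):
    # Hypothetical variant of entropy(): drops zero-probability outcomes,
    # following the convention that 0 * log2(0) counts as 0.
    p = np.asarray(dist, dtype=float)
    p = p[p > 0]
    # Uses the log2(1/p) form, so a certain outcome gives 0.0 rather than -0.0.
    return float(np.sum(p * np.log2(1.0 / p)))

print(entropy_safe([0.5, 0.5, 0.0]))  # 1.0
print(entropy_safe([1.0]))            # 0.0
```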

When the variable has a 50/50 distribution, you will always need to ask one question to find the answer:

dist = [1/2.]*2

dist

[0.5, 0.5]

entropy(dist)

1.0

When you have only one possible outcome, you don't need to ask any questions:

dist = [1.]

dist

[1.0]

entropy(dist)

-0.0

When you have a lot of equally likely possibilities, you have to ask a lot of questions and entropy is high:

dist = [1/100.]*100

dist[:5]

[0.01, 0.01, 0.01, 0.01, 0.01]

entropy(dist)

6.643856189774724
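For a uniform distribution over n outcomes every term in the sum is the same, so the entropy reduces to log2(n). The sketch below checks this numerically for a few values of n, restating the `entropy` function so that it runs on its own:

```python
import numpy as np

def entropy(dist):
    # Shannon entropy in bits; assumes every probability is nonzero.
    return -np.sum(np.array(dist) * np.log2(dist))

# Compare the computed entropy with log2(n) for uniform distributions.
for n in [2, 8, 100]:
    uniform = [1.0 / n] * n
    print(n, entropy(uniform), np.log2(n))
```

Each doubling of the number of equally likely outcomes adds one bit, that is, one more yes/no question.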

When you have one really likely possibility, your first guess is almost always right, so on average you need far less than one question:

dist = [99/100.] + [1/100.]

dist

[0.99, 0.01]

entropy(dist)

0.08079313589591118
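The 50/50 and 99/1 examples are two points on the same curve. As a rough sketch (the function name `binary_entropy` is an assumption, not from the original code), sweeping the probability of one outcome of a two-outcome variable shows the entropy falling from 1 bit toward 0 as the variable becomes more predictable:

```python
import numpy as np

def binary_entropy(p):
    # Entropy in bits of a two-outcome variable with probabilities p and 1 - p.
    dist = np.array([p, 1.0 - p])
    dist = dist[dist > 0]  # treat 0 * log2(0) as 0
    return float(np.sum(dist * np.log2(1.0 / dist)))

# Entropy shrinks as one outcome becomes more likely.
for p in [0.5, 0.7, 0.9, 0.99, 1.0]:
    print(p, round(binary_entropy(p), 4))
```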

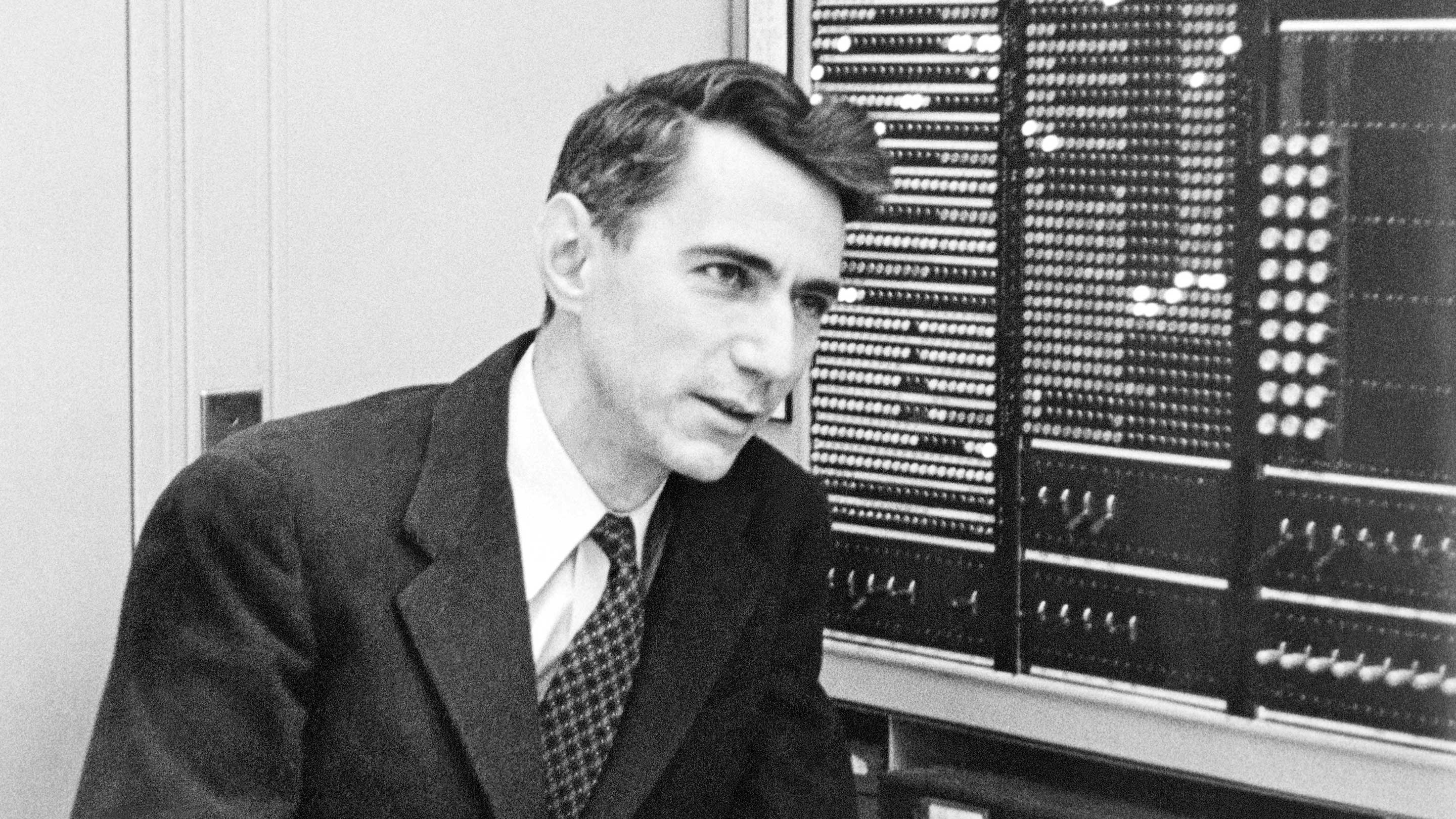

Claude Shannon borrowed the term Entropy from thermodynamics as part of his theory of communication.

[@khanacademylabsInformationEntropyJourney]

Cover from How Claude Shannon Invented the Future.