Basis Vectors

The set of vectors that defines a space is called the basis.

We refer to these vectors as basis vectors.

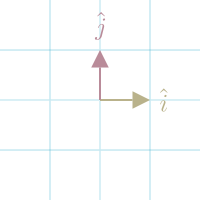

In 2D space, basis vectors are commonly defined as $\hat{i} = \begin{bmatrix} 1 \\ 0 \end{bmatrix}$ and $\hat{j} = \begin{bmatrix} 0 \\ 1 \end{bmatrix}$.

These particular vectors are called the standard basis vectors. We can think of them as one unit in the x direction and one unit in the y direction.

We can think of every other vector in the space as a linear combination of the basis vectors.

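For example, if we have a vector $\vec{v} = \begin{bmatrix} x \\ y \end{bmatrix}$, we can treat each component as a scalar (see Vector Scaling) for the basis vectors: $\vec{v} = x \begin{bmatrix} 1 \\ 0 \end{bmatrix} + y \begin{bmatrix} 0 \\ 1 \end{bmatrix}$.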
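A minimal Python sketch of the same idea. The function name `linear_combination`, the variable names `i_hat` and `j_hat`, and the vector with components 3 and -2 are all illustrative choices, not taken from the sources cited here.

```python
def linear_combination(scalars, basis):
    """Return scalars[0]*basis[0] + scalars[1]*basis[1] + ..., component by component."""
    dim = len(basis[0])
    return [sum(s * b[i] for s, b in zip(scalars, basis)) for i in range(dim)]


# Standard basis vectors in 2D.
i_hat = [1, 0]
j_hat = [0, 1]

# Each component of the vector acts as a scalar on one basis vector.
v = linear_combination([3, -2], [i_hat, j_hat])
print(v)  # [3, -2]
```

With the standard basis, the scalars and the resulting components are the same numbers. Passing a different pair of basis vectors to the same function gives a different vector for the same scalars.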

We can choose any set of vectors as the basis for a space, giving us an entirely new coordinate system. However, the vectors must meet the following criteria:

- They're linearly independent. In 2D, that means you cannot get one vector by just scaling the other.

- They span the space. That means that, by taking a linear combination of the basis vectors, you can reach any vector in the space.
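For two vectors in 2D, both criteria can be checked at once: the pair is linearly independent, and therefore spans the plane, exactly when the determinant of the 2x2 matrix built from them is nonzero. The sketch below assumes that 2D case only. The name `is_basis_2d` and the tolerance `tol` are hypothetical choices for illustration.

```python
def is_basis_2d(a, b, tol=1e-12):
    """Return True if 2D vectors a and b are linearly independent.

    The determinant a[0]*b[1] - a[1]*b[0] is zero exactly when one
    vector is a scaled copy of the other (or one of them is zero).
    """
    det = a[0] * b[1] - a[1] * b[0]
    return abs(det) > tol


print(is_basis_2d([1, 0], [0, 1]))   # True: the standard basis
print(is_basis_2d([1, 1], [-1, 1]))  # True: det = 1*1 - 1*(-1) = 2
print(is_basis_2d([1, 2], [2, 4]))   # False: [2, 4] is 2 * [1, 2]
```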

Basis vectors don't have to be orthogonal to each other, but transformations become more challenging with a non-orthogonal basis.
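One way to see the extra work, sketched here for 2D only, is to find a vector's coordinates in a new basis. With an orthogonal basis, each coordinate comes from a single projection (a dot product). With a non-orthogonal basis, that shortcut gives the wrong answer and we have to solve a small linear system instead. The function names and the example vectors below are illustrative assumptions.

```python
def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]


def coords_by_projection(v, b1, b2):
    """Coordinates of v via projection. Only valid when b1 and b2 are orthogonal."""
    return [dot(v, b1) / dot(b1, b1), dot(v, b2) / dot(b2, b2)]


def coords_by_solving(v, b1, b2):
    """Solve c1*b1 + c2*b2 = v with Cramer's rule. Works for any 2D basis."""
    det = b1[0] * b2[1] - b1[1] * b2[0]
    if det == 0:
        raise ValueError("b1 and b2 are linearly dependent, so they are not a basis")
    c1 = (v[0] * b2[1] - v[1] * b2[0]) / det
    c2 = (b1[0] * v[1] - b1[1] * v[0]) / det
    return [c1, c2]


v = [3, 1]

# Orthogonal basis: projection and solving agree.
print(coords_by_projection(v, [1, 1], [-1, 1]))  # [2.0, -1.0]
print(coords_by_solving(v, [1, 1], [-1, 1]))     # [2.0, -1.0]

# Non-orthogonal basis: only solving gives coordinates that rebuild v.
print(coords_by_projection(v, [1, 0], [1, 1]))   # [3.0, 2.0], rebuilds [5, 2], not v
print(coords_by_solving(v, [1, 0], [1, 1]))      # [2.0, 1.0], rebuilds [3, 1]
```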

[@3blue1brownVectorsChapter2Essence2016] [@dyeMathematicsMachineLearning]