Dot Product

A dot product is an operation between 2 vectors with equal dimensions that returns a number.

To compute it, multiply each component of the first vector by the corresponding component of the second vector, then sum the results.

$a = [a_1, \dots, a_N]$, $b = [b_1, \dots, b_N]$

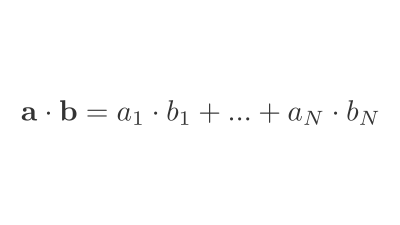

$$a \cdot b = a_1 b_1 + \dots + a_N b_N$$

Order doesn't matter: $a \cdot b = b \cdot a$. In other words, the dot product is commutative.

The dot product is helpful because it tells you about the angle between 2 vectors.

If the vectors are perpendicular to each other, their dot product is 0.

$$[1, 0] \cdot [0, 1] = 1 \cdot 0 + 0 \cdot 1 = 0$$

If the vectors point in opposite directions, the dot product is negative.

$$[1, 0] \cdot [-1, 0] = 1 \cdot (-1) + 0 \cdot 0 = -1$$
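Both cases are easy to check numerically. The sketch below uses a small helper called `dot`, an illustrative name chosen for this example, and adds a third case (a vector dotted with itself) for comparison:

```python
def dot(a, b):
    """Sum of element-wise products of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

right = [1, 0]
up = [0, 1]
left = [-1, 0]

perpendicular = dot(right, up)   # 1*0 + 0*1
opposite = dot(right, left)      # 1*(-1) + 0*0
same = dot(right, right)         # 1*1 + 0*0

print(perpendicular, opposite, same)  # 0 -1 1
```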

We can find the angle, in radians, between any two vectors by starting from the geometric form of the dot product:

$$a \cdot b = |a||b|\cos\theta$$

That's the "length of a" times the "length of b" times "the Cosine (cos) of the angle (θ) between them".

The cosine of an angle is a real number between -1 and 1, where:

- $\cos(180^\circ) = -1$

- $\cos(90^\circ) = 0$

- $\cos(0^\circ) = 1$

$\cos(90^\circ) = 0$ explains why the dot product of perpendicular vectors is 0: $|a||b| \cdot 0 = 0$.

So, to find the angle, we can rearrange to put θ on the left-hand side: $\theta = \cos^{-1}\left(\frac{a \cdot b}{|a||b|}\right)$.
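As a minimal sketch of that rearrangement, the function below computes the angle using only the standard library. The names `dot`, `length` and `angle_between` are illustrative, and the code assumes neither vector is the zero vector:

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def length(v):
    # The length of a vector is the square root of its dot product with itself.
    return math.sqrt(dot(v, v))

def angle_between(a, b):
    """Angle in radians between two non-zero vectors."""
    cos_theta = dot(a, b) / (length(a) * length(b))
    # Clamp to [-1, 1] so floating-point rounding can't push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.acos(cos_theta)

theta = angle_between([1, 0], [0, 1])
print(math.degrees(theta))  # approximately 90 degrees
```

The clamp is a defensive choice: for nearly parallel vectors, rounding can produce a value slightly above 1, which `math.acos` would reject.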

If we know a and b are unit vectors, their lengths will equal 1. So the expression is simply: $\theta = \cos^{-1}(a \cdot b)$.

We can normalise any vector to convert it into a unit vector by dividing each component by the vector's length: $\hat{a} = \frac{a}{|a|}$.
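Normalisation can be sketched in a few lines. The function name `normalise` is an illustrative choice, and the zero vector is rejected because it has no direction to preserve:

```python
import math

def normalise(v):
    """Return v scaled to length 1. The zero vector cannot be normalised."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalise the zero vector")
    return [x / norm for x in v]

unit = normalise([3, 4])
print(unit)  # [0.6, 0.8]
```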

We use the dot product throughout game development. For example, it can tell us whether a player turns by comparing their directional vector across frames. We can use it to know whether a player is facing an enemy and so on.
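As one hedged illustration of the "facing an enemy" check, the sketch below tests whether a target falls inside a player's field of view. The function `is_facing`, its parameters, and the 90° default are assumptions made for this example, not part of any particular game engine:

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalise(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def is_facing(position, forward, target, fov_degrees=90.0):
    """True if target lies within the player's field of view.

    forward is the direction the player looks; fov_degrees is the full cone width.
    Assumes target differs from position and forward is non-zero.
    """
    to_target = normalise([t - p for t, p in zip(target, position)])
    # For unit vectors the dot product equals cos(theta), so compare it
    # against the cosine of half the field of view.
    return dot(normalise(forward), to_target) >= math.cos(math.radians(fov_degrees / 2))

player_pos, player_forward = [0, 0], [1, 0]
print(is_facing(player_pos, player_forward, [5, 1]))   # True: enemy is ahead
print(is_facing(player_pos, player_forward, [-5, 1]))  # False: enemy is behind
```

Comparing cosines directly avoids calling `acos` every frame, since a larger cosine always means a smaller angle.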

We also use the dot product throughout data science.

Recommendation engines are one application.

For example, we can find a vector for each user that represents their movie preferences. One column could describe how much they like scary movies, another for how much they like comedy movies, and so on.

Then for each item, we can create a vector that represents its characteristics. For example, we have a vector with each column describing how scary it is, how funny it is, and so on.

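Then, we can take the dot product between a user and each item to estimate how likely the user is to enjoy it. The higher the dot product, the stronger the predicted match. If both vectors are normalised to unit length, the result is the cosine of the angle between them, so the further an item's score is from 1, the less likely the user is to like it.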
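A toy version of this scoring step might look like the sketch below. The user vector, movie titles, and feature values are all made up for illustration, with columns assumed to mean scary, funny, and romantic:

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical preference vector: [scary, funny, romantic]
user = [0.9, 0.1, 0.0]

# Hypothetical item vectors using the same columns
movies = {
    "Haunted House": [1.0, 0.0, 0.0],
    "Slapstick Night": [0.0, 1.0, 0.1],
    "Spooky Comedy": [0.6, 0.6, 0.0],
}

# Score every movie against the user, then rank from best to worst match.
scores = {title: dot(user, features) for title, features in movies.items()}
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)  # ['Haunted House', 'Spooky Comedy', 'Slapstick Night']
```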

In Machine Learning, we can train a model on a dataset of preference information, often a dataset of user ratings, to learn these vectors. In this context, the vectors are referred to as Embeddings.

Simple Python example:

```python
def dot_product(a, b):
    # Multiply corresponding elements, then sum the products.
    return sum(x * y for x, y in zip(a, b))

A = [1, 2, 3]
B = [4, 5, 6]

dot_product(A, B)  # 32
```

NumPy example:

```python
import numpy as np

A = [1, 2, 3]
B = [4, 5, 6]

np.dot(A, B)  # 32
```