Week 3 - Matrices in Linear Algebra: Objects that operate on Vectors

Introduction to matrices

Matrices, vectors, and solving simultaneous equation problems

- Matrices are objects that "rotate and stretch" vectors.

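Given this simultaneous equation (the apples and bananas problem):

$$2a + 3b = 8$$
$$10a + 1b = 13$$

We can express it as a matrix product:

$$\begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}\begin{pmatrix}a\\b\end{pmatrix} = \begin{pmatrix}8\\13\end{pmatrix}$$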

A matrix product takes the dot product of each row of the left matrix A with each column of the right matrix B (see Matrix Multiplication).

- So we could rephrase the question as: which vector $(a, b)$ does this matrix transform into the answer vector?

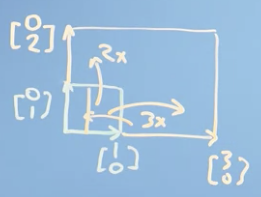

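What happens when you multiply the matrix by the Basis Vectors? It takes each basis vector and moves it to another place:

$$\begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}\begin{pmatrix}1\\0\end{pmatrix} = \begin{pmatrix}2\\10\end{pmatrix}, \qquad \begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}\begin{pmatrix}0\\1\end{pmatrix} = \begin{pmatrix}3\\1\end{pmatrix}$$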

So the matrix "moves" the basis vectors: each column of the matrix is where the corresponding basis vector ends up.

We can think of a matrix as a function that operates on input vectors and gives us new output vectors.
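
A minimal NumPy sketch of the "matrix as a function" idea, assuming the apples and bananas matrix $\begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}$ (from $2a + 3b = 8$, $10a + 1b = 13$); any 2x2 matrix behaves the same way. The names `A`, `e1` and `e2` are only illustrative. Multiplying by each basis vector just picks out one column of the matrix:

```python
import numpy as np

# Apples and bananas matrix (assumed example): 2a + 3b = 8, 10a + 1b = 13
A = np.array([[2, 3],
              [10, 1]])

# The standard basis vectors e1_hat and e2_hat
e1 = np.array([1, 0])
e2 = np.array([0, 1])

# Applying A to a basis vector returns the matching column of A
moved_e1 = A @ e1  # first column of A
moved_e2 = A @ e2  # second column of A

print(moved_e1)  # [ 2 10]
print(moved_e2)  # [3 1]
```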

Why is it called "Linear algebra"?

- Linear because it just takes input values, multiplies them by constants, and adds the results together.

- Algebra because it's a notation for describing mathematical objects.

- "So linear algebra is a mathematical system for manipulating vectors in the spaces described by vectors."

- The "heart of linear algebra": the connection between simultaneous equations and how matrices transform vectors.

Matrices in linear algebra: operating on vectors

How matrices transform space

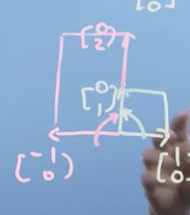

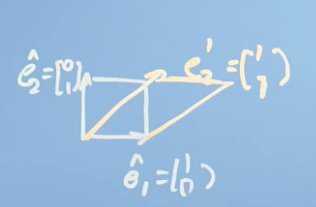

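- We know that we can make any vector out of a sum of scaled versions of $\hat{e}_1$ and $\hat{e}_2$.

- As a consequence of the scalar multiplication and vector addition rules for vectors, the grid lines of our space stay parallel and evenly spaced (and the origin stays fixed), even though they may be stretched or rotated.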

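- If we have a matrix $A$, a vector $r$ and a result $r'$ with the relationship $Ar = r'$:

- If we multiply $r$ by a number $n$, then apply $A$ to $nr$, we get the result scaled by $n$: $A(nr) = nr'$

- If we multiply $A$ by the sum of vectors $r + s$, we get $A(r + s) = Ar + As$

If we think of $\hat{e}_1$ and $\hat{e}_2$ as the original basis vectors, and $\hat{e}_1'$ and $\hat{e}_2'$ as where $A$ sends them, then for any vector $n\hat{e}_1 + m\hat{e}_2$:

$$A(n\hat{e}_1 + m\hat{e}_2) = nA\hat{e}_1 + mA\hat{e}_2 = n\hat{e}_1' + m\hat{e}_2'$$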

An example:

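- Given this expression: $\begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}\begin{pmatrix}3\\2\end{pmatrix}$

- We can rewrite as: $\begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}\left(3\begin{pmatrix}1\\0\end{pmatrix} + 2\begin{pmatrix}0\\1\end{pmatrix}\right)$

- Which is the same as: $3\begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}\begin{pmatrix}1\\0\end{pmatrix} + 2\begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}\begin{pmatrix}0\\1\end{pmatrix}$

- Simplified to: $3\begin{pmatrix}2\\10\end{pmatrix} + 2\begin{pmatrix}3\\1\end{pmatrix}$

- Which we can simplify to: $\begin{pmatrix}6\\30\end{pmatrix} + \begin{pmatrix}6\\2\end{pmatrix} = \begin{pmatrix}12\\32\end{pmatrix}$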

- "We can think of a matrix multiplication as just being the multiplication of the vector sum of the transformed basis vectors."
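
As a quick numerical check of this idea (a sketch, assuming the same matrix and the vector $(3, 2) = 3\hat{e}_1 + 2\hat{e}_2$ from the example), multiplying directly and summing the scaled, transformed basis vectors give the same answer:

```python
import numpy as np

A = np.array([[2, 3],
              [10, 1]])
e1 = np.array([1, 0])
e2 = np.array([0, 1])

# r = 3*e1 + 2*e2, i.e. the vector (3, 2)
r = 3 * e1 + 2 * e2

direct = A @ r                           # multiply the matrix by r directly
via_basis = 3 * (A @ e1) + 2 * (A @ e2)  # sum of the scaled, transformed basis vectors

print(direct)     # [12 32]
print(via_basis)  # [12 32]
```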

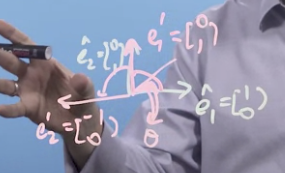

Types of matrix transformation

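Identity Matrix (00:00-00:53)

A matrix that doesn't change any vector or matrix it multiplies, like 1 in scalar math:

$$I = \begin{pmatrix}1 & 0\\0 & 1\end{pmatrix}$$

A scaling matrix is a diagonal matrix (a stretched identity matrix) that scales each axis of a vector, e.g. by 3 along $x$ and by 2 along $y$:

$$\begin{pmatrix}3 & 0\\0 & 2\end{pmatrix}$$

If you have a negative number for one of the axes, you flip the vector along that axis, e.g. this matrix flips $x$ (and stretches $y$ by 2):

$$\begin{pmatrix}-1 & 0\\0 & 2\end{pmatrix}$$

If you have $-1$ in each of the diagonal positions, you invert the vector (point it in the opposite direction):

$$\begin{pmatrix}-1 & 0\\0 & -1\end{pmatrix}$$

You can switch the axes of a vector (a mirror in the line $y = x$) with this matrix:

$$\begin{pmatrix}0 & 1\\1 & 0\end{pmatrix}$$

You can perform a vertical mirror of a vector (a reflection in the $y$-axis) with the matrix:

$$\begin{pmatrix}-1 & 0\\0 & 1\end{pmatrix}$$

You can shear a vector with this matrix, which keeps $\hat{e}_1$ fixed and moves $\hat{e}_2$ to $(1, 1)$:

$$\begin{pmatrix}1 & 1\\0 & 1\end{pmatrix}$$

You can rotate a vector, e.g. by 90° anticlockwise, with this matrix:

$$\begin{pmatrix}0 & -1\\1 & 0\end{pmatrix}$$

In general, the matrix for an anticlockwise rotation in 2D is the following, where $\theta$ is the angle of rotation:

$$\begin{pmatrix}\cos\theta & -\sin\theta\\ \sin\theta & \cos\theta\end{pmatrix}$$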

We store a digital image as a collection of colored pixels at their particular coordinates on a grid. If we apply a matrix transformation to the coordinates of each pixel in an image, we transform the picture as a whole.
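
A rough sketch of that idea in NumPy: store the (hypothetical) pixel coordinates as the columns of a 2×N array, and one matrix product transforms every point at once. `rotation_matrix` is an illustrative helper built from the general anticlockwise rotation formula (angle in radians), and the four points are just the corners of a unit square, not real image data:

```python
import numpy as np

def rotation_matrix(theta):
    """2D anticlockwise rotation by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s],
                     [s,  c]])

# Hypothetical pixel coordinates, one (x, y) point per column
coords = np.array([[0, 1, 1, 0],
                   [0, 0, 1, 1]])   # corners of a unit square

R = rotation_matrix(np.pi / 2)      # rotate by 90 degrees
shear = np.array([[1, 1],
                  [0, 1]])          # shear that keeps e1_hat fixed

rotated = R @ coords   # one matrix product transforms every point at once
sheared = shear @ coords

print(np.round(rotated, 3))
print(sheared)
```

Keeping one point per column means `M @ coords` applies any transformation `M` to the whole "image" in a single call; cos(π/2) is only approximately 0 in floating point, hence the rounding when printing.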

Composition or combination of matrix transforms

- You can build any shape change for a vector out of combinations of rotations, shears, stretches, and inverses.

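- I can apply $A_1$ to $r$, then $A_2$ to that result: $A_2(A_1 r)$

- Alternatively, you can first apply $A_2$ to $A_1$ (compute the product $A_2A_1$) and apply that to $r$ to get the same result: $(A_2A_1)r$

- Note that $A_2$ applied to $A_1$ isn't the same as $A_1$ applied to $A_2$: the order matters, and in general $A_2A_1 \neq A_1A_2$.

- Therefore, matrix multiplication isn't commutative.

- Matrix multiplication is associative: $A_3(A_2A_1) = (A_3A_2)A_1$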
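
A small sketch checking these properties numerically, assuming three example matrices (a 90° rotation, a shear and a stretch, chosen only for illustration):

```python
import numpy as np

# Example transforms, chosen only for illustration
A1 = np.array([[0, -1],
               [1,  0]])   # rotate 90 degrees anticlockwise
A2 = np.array([[1, 1],
               [0, 1]])    # shear
A3 = np.array([[3, 0],
               [0, 2]])    # stretch x by 3 and y by 2

r = np.array([1, 2])

step_by_step = A2 @ (A1 @ r)  # apply A1 to r, then A2 to the result
combined = (A2 @ A1) @ r      # combine the transforms first, then apply once

print(np.array_equal(step_by_step, combined))          # True
print(np.array_equal(A2 @ A1, A1 @ A2))                # False: order matters
print(np.array_equal(A3 @ (A2 @ A1), (A3 @ A2) @ A1))  # True: associative
```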

Matrix inverses

Solving the apples and bananas problem: Gaussian Elimination

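Revisit the Apples and Bananas problem, now with three unknowns ($a$, $b$, $c$):

$$\begin{pmatrix}1 & 1 & 3\\1 & 2 & 4\\1 & 1 & 2\end{pmatrix}\begin{pmatrix}a\\b\\c\end{pmatrix} = \begin{pmatrix}15\\21\\13\end{pmatrix}$$

That's a matrix multiplied by a vector.

We can call the matrix $A$, vector $r$ and output $s$:

$$Ar = s$$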

Inverse Matrix (00:59-02:04)

- Can we find another matrix $A^{-1}$ that, when multiplied by $A$, gives us the identity matrix: $A^{-1}A = I$?

- We call $A^{-1}$ the "inverse" of $A$, since it reverses $A$ and gives you the identity matrix.

- We can then multiply both sides of the expression by the inverse (on the left): $A^{-1}Ar = A^{-1}s$.

- Since we know that $A^{-1}A$ is simply the identity matrix, we can simplify: $r = A^{-1}s$.

- So, if we can find the inverse of $A$, we can solve the apples and bananas problem.

Solving matrix problems with Elimination and Back Substitution (02:15-08:00)

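We can also solve the apples and bananas problem directly, with just elimination and substitution.

We can take row 1 off rows 2 and 3:

$$\begin{pmatrix}1 & 1 & 3\\0 & 1 & 1\\0 & 0 & -1\end{pmatrix}\begin{pmatrix}a\\b\\c\end{pmatrix} = \begin{pmatrix}15\\6\\-2\end{pmatrix}$$

We can multiply row 3 (the row for $c$) by -1, which gives us the value of $c$: $c = 2$.

We now have what's called a Triangular Matrix, which is a matrix where everything below the leading diagonal is 0. We have reduced the matrix to Row Echelon Form:

$$\begin{pmatrix}1 & 1 & 3\\0 & 1 & 1\\0 & 0 & 1\end{pmatrix}\begin{pmatrix}a\\b\\c\end{pmatrix} = \begin{pmatrix}15\\6\\2\end{pmatrix}$$

Now we can take $c$ out of the other rows: subtract 3x row 3 from row 1, and 1x row 3 from row 2:

$$\begin{pmatrix}1 & 1 & 0\\0 & 1 & 0\\0 & 0 & 1\end{pmatrix}\begin{pmatrix}a\\b\\c\end{pmatrix} = \begin{pmatrix}9\\4\\2\end{pmatrix}$$

Now we know that $b = 4$. We can remove $b$ from the first row (subtract row 2 from row 1), which gives $a = 5$.

- So we've first done Elimination to get to triangular form.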

- Then do Back Substitution to get a solution to the problem.

- This is one of the most computationally efficient ways to solve the problem.

- However, while we have solved this particular problem, we haven't solved it in a general way (for any output vector $s$).
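
A minimal sketch of elimination followed by back substitution, assuming the three-unknown apples and bananas system (repeated in the code). Like the hand calculation, it assumes every pivot is non-zero, so it never swaps rows; a robust solver would add partial pivoting. `solve_by_elimination` is a hypothetical helper name:

```python
import numpy as np

def solve_by_elimination(A, s):
    """Solve A r = s by elimination to triangular form, then back substitution.

    Assumes every pivot is non-zero, so no row swaps are needed.
    """
    M = np.array(A, dtype=float)
    y = np.array(s, dtype=float)
    n = len(y)

    # Elimination: clear every entry below the leading diagonal
    for i in range(n):
        for k in range(i + 1, n):
            factor = M[k, i] / M[i, i]
            M[k, i:] -= factor * M[i, i:]
            y[k] -= factor * y[i]

    # Back substitution: solve from the last row upwards
    r = np.zeros(n)
    for i in range(n - 1, -1, -1):
        r[i] = (y[i] - M[i, i + 1:] @ r[i + 1:]) / M[i, i]
    return r

A = [[1, 1, 3],
     [1, 2, 4],
     [1, 1, 2]]
s = [15, 21, 13]
print(solve_by_elimination(A, s))  # [5. 4. 2.]
```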

Going from Gaussian Elimination to finding the inverse matrix

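Using Elimination to find the Inverse Matrix (00:00-07:26)

Here, we have the 3x3 matrix $A$ from the apples and bananas problem multiplied by its inverse $B = A^{-1}$, which equals the identity matrix:

$$\begin{pmatrix}1 & 1 & 3\\1 & 2 & 4\\1 & 1 & 2\end{pmatrix}\begin{pmatrix}b_{11} & b_{12} & b_{13}\\b_{21} & b_{22} & b_{23}\\b_{31} & b_{32} & b_{33}\end{pmatrix} = \begin{pmatrix}1 & 0 & 0\\0 & 1 & 0\\0 & 0 & 1\end{pmatrix}$$

We can start by just solving for the first column of the inverse matrix:

$$\begin{pmatrix}1 & 1 & 3\\1 & 2 & 4\\1 & 1 & 2\end{pmatrix}\begin{pmatrix}b_{11}\\b_{21}\\b_{31}\end{pmatrix} = \begin{pmatrix}1\\0\\0\end{pmatrix}$$

Or do all three columns at once, applying each row operation to $A$ and to the identity matrix together:

$$\left(\begin{array}{ccc|ccc}1 & 1 & 3 & 1 & 0 & 0\\1 & 2 & 4 & 0 & 1 & 0\\1 & 1 & 2 & 0 & 0 & 1\end{array}\right)$$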

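We can take the first row off the 2nd and 3rd rows, and do the same to the identity matrix:

$$\left(\begin{array}{ccc|ccc}1 & 1 & 3 & 1 & 0 & 0\\0 & 1 & 1 & -1 & 1 & 0\\0 & 0 & -1 & -1 & 0 & 1\end{array}\right)$$

Then multiply the last row by -1 to put the left matrix in triangular form:

$$\left(\begin{array}{ccc|ccc}1 & 1 & 3 & 1 & 0 & 0\\0 & 1 & 1 & -1 & 1 & 0\\0 & 0 & 1 & 1 & 0 & -1\end{array}\right)$$

We can then substitute the 3rd row back into the other rows: take 1x the 3rd row off the 2nd, and 3x the 3rd row off the 1st:

$$\left(\begin{array}{ccc|ccc}1 & 1 & 0 & -2 & 0 & 3\\0 & 1 & 0 & -2 & 1 & 1\\0 & 0 & 1 & 1 & 0 & -1\end{array}\right)$$

Then take the 2nd row off the first:

$$\left(\begin{array}{ccc|ccc}1 & 0 & 0 & 0 & -1 & 2\\0 & 1 & 0 & -2 & 1 & 1\\0 & 0 & 1 & 1 & 0 & -1\end{array}\right)$$

So now we have an inverse of $A$:

$$A^{-1} = \begin{pmatrix}0 & -1 & 2\\-2 & 1 & 1\\1 & 0 & -1\end{pmatrix}$$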
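
The same row operations can be sketched as a function that row-reduces the augmented matrix $[A \mid I]$ until the left half becomes $I$; the right half is then $A^{-1}$. This is a minimal Gauss-Jordan sketch (`inverse_by_elimination` is a hypothetical helper) that, like the hand calculation, assumes no zero pivots turn up, so it does no row swaps:

```python
import numpy as np

def inverse_by_elimination(A):
    """Invert A by row-reducing the augmented matrix [A | I] to [I | A^-1].

    Assumes every pivot is non-zero, so no row swaps are needed.
    """
    A = np.array(A, dtype=float)
    n = A.shape[0]
    aug = np.hstack([A, np.eye(n)])  # every row operation acts on A and I together

    # Elimination: triangular form with 1s on the leading diagonal
    for i in range(n):
        aug[i] /= aug[i, i]
        for k in range(i + 1, n):
            aug[k] -= aug[k, i] * aug[i]

    # Back substitution: clear every entry above the diagonal
    for i in range(n - 1, -1, -1):
        for k in range(i):
            aug[k] -= aug[k, i] * aug[i]

    return aug[:, n:] + 0.0  # "+ 0.0" turns any -0.0 into 0.0 for tidier printing

A = [[1, 1, 3],
     [1, 2, 4],
     [1, 1, 2]]
print(inverse_by_elimination(A))
```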

There are more computationally efficient ways to do this. In practice, you'll call a solver from a numerical library. In NumPy, it's:

`numpy.linalg.inv(A)`, which effectively solves $AX = I$, giving the same result as `numpy.linalg.solve(A, I)` with `I` the identity matrix.
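
A short sketch of the library route, assuming the three-unknown apples and bananas system (repeated in the code). For a single system, `numpy.linalg.solve(A, s)` is usually preferred over `inv(A) @ s`, since it skips forming the inverse explicitly:

```python
import numpy as np

# Three-unknown apples and bananas system A r = s
A = np.array([[1, 1, 3],
              [1, 2, 4],
              [1, 1, 2]], dtype=float)
s = np.array([15, 21, 13], dtype=float)

A_inv = np.linalg.inv(A)                         # explicit inverse
A_inv_via_solve = np.linalg.solve(A, np.eye(3))  # solve A X = I for X

r_from_inverse = A_inv @ s        # r = A^-1 s
r_direct = np.linalg.solve(A, s)  # solve A r = s without forming A^-1

print(np.allclose(A_inv, A_inv_via_solve))    # True
print(np.allclose(r_from_inverse, r_direct))  # True
print(r_direct)                               # approximately [5. 4. 2.]
```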

Special matrices and Coding up some matrix operations

Determinants and inverses

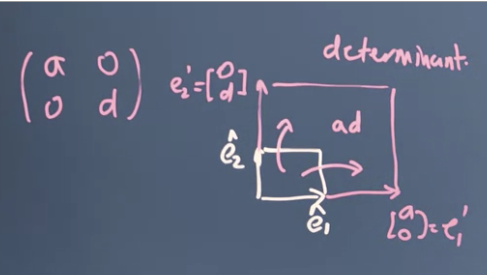

- Matrix Determinant (00:00-05:36)

- A matrix like $\begin{pmatrix}a & 0\\0 & d\end{pmatrix}$ scales space by a factor of $ad$.

- $ad$ is called the "determinant" of the transformation matrix.

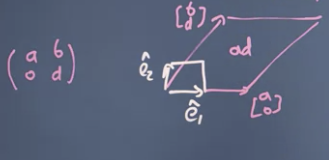

If you have the matrix $\begin{pmatrix}a & b\\0 & d\end{pmatrix}$, you create a parallelogram, but the area is still $ad$.

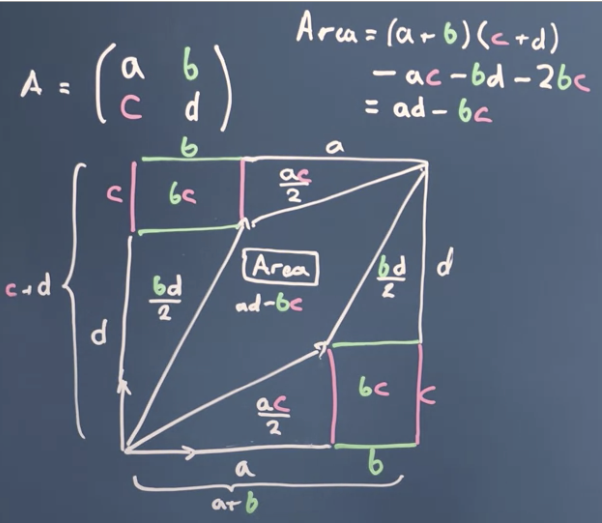

If you have a general matrix $\begin{pmatrix}a & b\\c & d\end{pmatrix}$, the area created by transforming the basis vectors is $ad - bc$.
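
To check the area claim numerically, here is a sketch that transforms the corners of the unit square with an arbitrary example matrix and measures the resulting parallelogram with the shoelace formula (a standard polygon-area formula, swapped in here purely for the check; `shoelace_area` is a hypothetical helper). For vertices listed anticlockwise, the signed area should equal $ad - bc$:

```python
import numpy as np

def shoelace_area(points):
    """Signed area of a polygon whose vertices (in order) are the columns of `points`.

    Positive when the vertices run anticlockwise.
    """
    x, y = points
    return 0.5 * (np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

# Corners of the unit square spanned by e1_hat and e2_hat, listed anticlockwise
unit_square = np.array([[0, 1, 1, 0],
                        [0, 0, 1, 1]])

# An arbitrary example matrix [[a, b], [c, d]]
M = np.array([[3, 1],
              [1, 2]])
(a, b), (c, d) = M

parallelogram = M @ unit_square      # the transformed square is a parallelogram
print(shoelace_area(parallelogram))  # 5.0
print(a * d - b * c)                 # 5
```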

We denote the determinant of a matrix $A$ as $\det(A)$ or $|A|$.

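A standard method for finding the inverse of a 2x2 matrix is to swap the terms on the leading diagonal, negate the terms on the other diagonal, then multiply by 1 / determinant:

$$\begin{pmatrix}a & b\\c & d\end{pmatrix}^{-1} = \frac{1}{ad - bc}\begin{pmatrix}d & -b\\-c & a\end{pmatrix}$$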

Knowing how to find the determinant by hand in the general ($n \times n$) case isn't a particularly valuable skill; we can ask our computer to do it: `numpy.linalg.det(A)`.
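
A sketch of the 2x2 formulas written out by hand and compared against NumPy, assuming the apples and bananas matrix $\begin{pmatrix}2 & 3\\10 & 1\end{pmatrix}$ as the example. The helpers `det_2x2` and `inverse_2x2` are illustrative, not library functions; `numpy.linalg.det` and `numpy.linalg.inv` are the real NumPy calls:

```python
import numpy as np

def det_2x2(M):
    """Determinant ad - bc of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = M
    return a * d - b * c

def inverse_2x2(M):
    """Swap a and d, negate b and c, then multiply by 1 / determinant."""
    (a, b), (c, d) = M
    det = det_2x2(M)
    if det == 0:
        raise ValueError("determinant is 0, so the matrix has no inverse")
    return np.array([[d, -b],
                     [-c, a]]) / det

A = np.array([[2, 3],
              [10, 1]])

print(det_2x2(A))        # -28
print(np.linalg.det(A))  # close to -28, up to floating-point rounding
print(np.allclose(inverse_2x2(A), np.linalg.inv(A)))  # True
print(np.allclose(A @ inverse_2x2(A), np.eye(2)))     # True
```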

- When a matrix doesn't have a Matrix Inverse (05:38-09:19)

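- Consider this matrix: $A = \begin{pmatrix}1 & 2\\1 & 2\end{pmatrix}$

- It transforms $\hat{e}_1$ and $\hat{e}_2$ onto the same line: $\hat{e}_1$ goes to $(1, 1)$ and $\hat{e}_2$ goes to $(2, 2)$.

- The determinant of A is 0: $|A| = 1 \cdot 2 - 2 \cdot 1 = 0$

- If you had a 3x3 matrix where one of the transformed Basis Vectors was just a linear combination of the other two (i.e. they aren't linearly independent), the new space would be a plane, which has zero volume, so the determinant would also be 0.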

Consider another matrix: $\begin{pmatrix}1 & 1 & 3\\1 & 2 & 4\\2 & 3 & 7\end{pmatrix}$. This one doesn't describe a new 3D space; it collapses space into a 2D plane.

- row 3 = row 1 + row 2

- col 3 = 2 col 1 + col 2

When you try to solve it, you don't have enough information: elimination reduces the last row to $0 \cdot c = 0$. That is true, but any value of $c$ would satisfy it, so there is no unique solution.

So, when the Basis Vectors that describe the matrix aren't linearly independent, the determinant is 0 and you cannot find the inverse matrix.
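
A sketch of how this shows up in NumPy, assuming the two example matrices above. `has_inverse` is a hypothetical helper; it relies on `numpy.linalg.inv` raising `numpy.linalg.LinAlgError` when it detects a singular matrix. Because of floating-point rounding, it's safer to compare a determinant against 0 with a tolerance (e.g. `numpy.isclose`) than with `==`:

```python
import numpy as np

def has_inverse(M):
    """Return True if NumPy can invert M, False if it reports a singular matrix."""
    try:
        np.linalg.inv(M)
        return True
    except np.linalg.LinAlgError:
        return False

A = np.array([[1, 2],
              [1, 2]], dtype=float)     # both basis vectors land on the same line
C = np.array([[1, 1, 3],
              [1, 2, 4],
              [2, 3, 7]], dtype=float)  # row 3 = row 1 + row 2

print(np.isclose(np.linalg.det(A), 0.0))  # True
print(np.isclose(np.linalg.det(C), 0.0))  # True
print(has_inverse(A))                     # False
print(has_inverse(np.eye(2)))             # True: the identity is its own inverse
```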

Another way to think of the inverse matrix is as something that undoes a transformation and returns the original vector: $A^{-1}(Ar) = r$.