AI Meets the Classroom: When Does ChatGPT Harm Learning?

My notes from the paper AI Meets the Classroom: When Does ChatGPT Harm Learning? by Matthias Lehmann, Philipp B. Cornelius, Fabian J. Sting.

Summary

This paper covers one observational and two experimental studies on the effects of LLM access on students learning to code.

Key findings:

- Using LLMs as personal tutors by asking them for explanations improves learning outcomes.

- Asking LLMs to generate solutions impairs learning. The use of copy-and-paste encourages this behaviour.

- Total beginners benefit more from LLM usage.

- LLM access inflates students' perceived learning beyond their actual learning outcomes, i.e. students believe it helps them much more than it actually does.

In the experimental study, they compare each student's code with a set of solutions generated by ChatGPT and use the resulting similarity, which they call ChatGPT Similarity, as a proxy for LLM use.

To calculate this, they generate 50 ChatGPT solutions for each question and take the maximum similarity between any of them and the student's final code:

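$$
\text{ChatGPT Similarity}_{iq} = \max_{k \in \{1, \dots, 50\}} \mathrm{DL}\left(c_{iq}, g_{qk}\right)
$$

for student $i$ and question $q$, where $c_{iq}$ is the final student code, $g_{qk}$ is one of the 50 ChatGPT-generated solutions, $k \in \{1, \dots, 50\}$, and $\mathrm{DL}$ is the Damerau-Levenshtein similarity.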
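A minimal Python sketch of this metric follows, to make the "maximum over generated solutions" step concrete. It is not the authors' code and rests on two assumptions: it uses the restricted (optimal string alignment) variant of Damerau-Levenshtein distance, and it turns the distance into a similarity as 1 − distance / length of the longer string. The paper may use the unrestricted variant or a different normalisation. The names `osa_distance`, `dl_similarity` and `chatgpt_similarity` are hypothetical.

```python
def osa_distance(a: str, b: str) -> int:
    """Damerau-Levenshtein distance, restricted (optimal string alignment) variant.

    Counts insertions, deletions, substitutions and transpositions of adjacent
    characters; unlike the unrestricted variant, no substring is edited twice.
    """
    n, m = len(a), len(b)
    # d[i][j] holds the distance between the prefixes a[:i] and b[:j]
    d = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        d[i][0] = i
    for j in range(m + 1):
        d[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match)
            )
            # transposition of two adjacent characters
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[n][m]


def dl_similarity(a: str, b: str) -> float:
    """Assumed normalisation: 1 - distance / length of the longer string."""
    if not a and not b:
        return 1.0
    return 1.0 - osa_distance(a, b) / max(len(a), len(b))


def chatgpt_similarity(student_code: str, generated_solutions: list[str]) -> float:
    """Maximum similarity between the final student code and any generated solution."""
    return max(dl_similarity(student_code, s) for s in generated_solutions)


print(chatgpt_similarity("print(1)", ["print(2)", "pass"]))  # → 0.875
```

Taking the maximum rather than the mean means a single close match is enough to score highly, which fits the idea that a student who copied one ChatGPT answer should be flagged even if the other 49 generated solutions look different.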

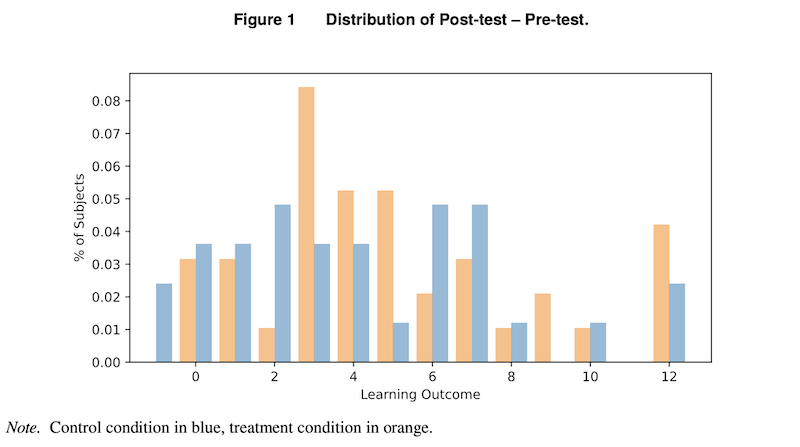

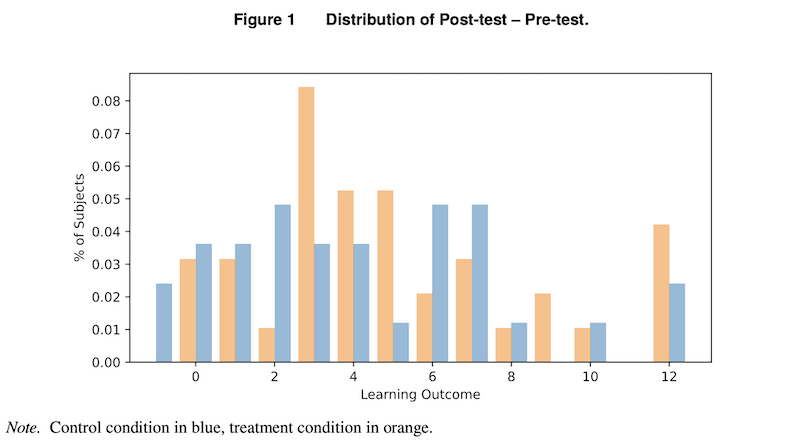

Figure 1 from the source shows the distribution of learning outcomes, measured as the difference between post-test and pre-test scores, for the control group (blue) and the treatment group (orange). The treatment group had access to ChatGPT during the learning phase of the experiment.

The figure shows that the treatment group produced more high performers: seven subjects in the treatment group surpassed a learning outcome score of 8, compared with only three in the control group.

For the remaining 85% of participants, the treatment group's distribution is narrower than the control group's, indicating a compressing effect.

Levene's test (p = 0.069) and the Brown-Forsythe test (p = 0.094) support this observation quantitatively: when the high performers are excluded, the variance of learning outcomes is lower in the treatment group than in the control group. The difference is significant at the 10% level, though not at the conventional 5% level. This suggests that access to ChatGPT may have made learning outcomes more homogeneous among these participants, with fewer students performing poorly but potentially also fewer achieving exceptionally high scores.
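The analysis pipeline can be sketched in pure Python to show what the two tests compare. This is a minimal sketch, not the authors' analysis. It makes three assumptions: learning outcome = post-test minus pre-test, "high performers" are participants whose outcome exceeds 8, and the tests are applied to the two remaining groups. Both tests compute the same W statistic, the ANOVA F-ratio of absolute deviations from each group's centre. Levene's original test centres on the group mean, and the Brown-Forsythe variant centres on the group median. The p-values above additionally require comparing W against the F(k − 1, N − k) distribution, which this sketch omits. SciPy's `scipy.stats.levene` computes both statistic and p-value, with `center="mean"` for Levene's test and `center="median"` (its default) for Brown-Forsythe. The function names below are hypothetical.

```python
from statistics import mean, median


def learning_outcomes(pre: list[float], post: list[float]) -> list[float]:
    """Learning outcome per participant: post-test score minus pre-test score."""
    return [after - before for before, after in zip(pre, post)]


def exclude_high_performers(outcomes: list[float], threshold: float = 8) -> list[float]:
    """Drop participants whose outcome surpasses the threshold (assumed cutoff: > 8)."""
    return [x for x in outcomes if x <= threshold]


def levene_w(groups: list[list[float]], center: str = "mean") -> float:
    """Levene's W statistic; center="median" gives the Brown-Forsythe variant.

    Requires at least two groups and non-identical deviations within groups.
    """
    centre_fn = mean if center == "mean" else median
    # absolute deviation of every observation from its own group's centre
    z = []
    for g in groups:
        c = centre_fn(g)
        z.append([abs(y - c) for y in g])
    k = len(z)                          # number of groups
    n_total = sum(len(zi) for zi in z)  # total number of observations
    z_means = [mean(zi) for zi in z]
    z_grand = sum(sum(zi) for zi in z) / n_total
    # between-group spread of the mean deviations
    between = sum(len(zi) * (zm - z_grand) ** 2 for zi, zm in zip(z, z_means))
    # within-group spread of the deviations
    within = sum((zij - zm) ** 2 for zi, zm in zip(z, z_means) for zij in zi)
    return (n_total - k) / (k - 1) * between / within
```

With `control` and `treatment` built as `exclude_high_performers(learning_outcomes(pre, post))` for each group, `levene_w([control, treatment])` gives Levene's statistic and `levene_w([control, treatment], center="median")` gives the Brown-Forsythe statistic. The median-centred version is less sensitive to skewed or heavy-tailed outcome distributions, which is one reason to report both.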