Week 4 - Matrices make linear mappings

Matrices make linear mappings

Matrices as objects that map one vector onto another; all the types of matrices

Introduction: Einstein summation convention and the Symmetry of the dot product

Einstein Summation Convention (00:00-04:24)

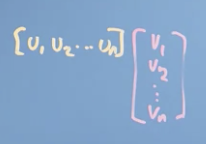

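We can represent a Matrix Multiplication between matrix $A$ (with elements $a_{ij}$) and matrix $B$ (with elements $b_{jk}$) like this:

$$AB = C$$

We can think of the element in row 2, column 3 of $AB$ as row 2 of $A$ multiplied by column 3 of $B$:

$$(ab)_{23} = a_{21}b_{13} + a_{22}b_{23} + \dots + a_{2n}b_{n3}$$

We can rewrite that, for any row $i$ and column $k$, as the sum over $j$ of the elements $a_{ij}b_{jk}$:

$$(ab)_{ik} = \sum_j a_{ij}b_{jk}$$

In Einstein's convention, if you have a repeated index like $j$, the sum over it is implied, so you can drop the Sigma notation:

$$(ab)_{ik} = a_{ij}b_{jk}$$

The Einstein Summation Convention also describes a way to code Matrix Multiplication: loop over $i$, $j$ and $k$, accumulating $a_{ij}b_{jk}$ into element $(i, k)$ of the result.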
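As a minimal sketch of that idea, the summation convention maps directly onto three nested loops. The function name `matmul` and the example matrices are illustrative assumptions, not something taken from the lecture.

```python
def matmul(a, b):
    """Multiply matrices stored as lists of rows: c[i][k] = sum over j of a[i][j] * b[j][k]."""
    n_rows, n_inner, n_cols = len(a), len(b), len(b[0])
    if any(len(row) != n_inner for row in a):
        raise ValueError("columns of a must equal rows of b")
    c = [[0] * n_cols for _ in range(n_rows)]
    for i in range(n_rows):            # free index i: row of the result
        for k in range(n_cols):        # free index k: column of the result
            for j in range(n_inner):   # repeated index j: summed over
                c[i][k] += a[i][j] * b[j][k]
    return c


# Illustrative 2 x 2 matrices (assumed values).
A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = matmul(A, B)
print(C)  # [[19, 22], [43, 50]]
```

The loop over `j` is the only one that accumulates, which is exactly what the repeated index means in the convention.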

Matrix Multiplication with rectangular matrices (04:24-05:55)

We can multiply rectangular matrices as long as the number of columns in the first equals the number of rows in the second.

The Einstein Summation Convention shows why those operations work: the sum runs over the shared index $j$, so as long as both matrices have the same number of $j$s, you can do it.
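A short sketch of the shape rule, assuming matrices stored as lists of rows. The helper `matmul` and the example values are hypothetical; the point is that a 2 x 3 matrix times a 3 x 4 matrix works, while the reverse order does not.

```python
def matmul(a, b):
    """Multiply a (n x m) by b (m x p); the shared dimension m is the summed index j."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("columns of a must equal rows of b")
    return [
        [sum(a[i][j] * b[j][k] for j in range(inner)) for k in range(len(b[0]))]
        for i in range(len(a))
    ]


A = [[1, 2, 3],
     [4, 5, 6]]            # 2 x 3
B = [[1, 0, 2, 0],
     [0, 1, 0, 2],
     [1, 1, 1, 1]]         # 3 x 4

C = matmul(A, B)           # 2 x 4 result
print(len(C), len(C[0]))   # 2 4

try:
    matmul(B, A)           # 3 x 4 times 2 x 3: the j counts differ
except ValueError as err:
    print("cannot multiply:", err)
```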

Dot Product revisited using Einstein's Summation Convention (05:55-07:14)

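- The dot product between vectors $u$ and $v$ in the summation convention is $u_i v_i$: the index $i$ is repeated, so we sum over it.

We can also consider a dot product a matrix product of a row vector and a column vector:

$$u \cdot v = \begin{bmatrix} u_1 & u_2 & \dots & u_n \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = u_i v_i$$

So there's an equivalence between Matrix Multiplication and the Dot Product.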
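To make the equivalence concrete, here is a small sketch comparing a plain dot product with a 1 x n by n x 1 matrix product. The helper names and the vectors are assumptions chosen for illustration.

```python
def dot(u, v):
    """Dot product u_i * v_i, summed over the repeated index i."""
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def matmul(a, b):
    """Matrix product of lists of rows."""
    return [
        [sum(a[i][j] * b[j][k] for j in range(len(b))) for k in range(len(b[0]))]
        for i in range(len(a))
    ]


u = [1, 2, 3]
v = [4, 5, 6]

row = [u]                  # u as a 1 x 3 row vector
col = [[x] for x in v]     # v as a 3 x 1 column vector

print(dot(u, v))           # 32
print(matmul(row, col))    # [[32]]
```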

Symmetry of the Dot Product (07:15-09:32)

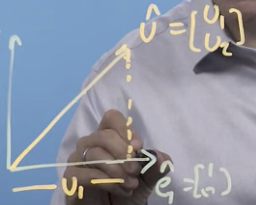

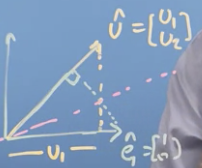

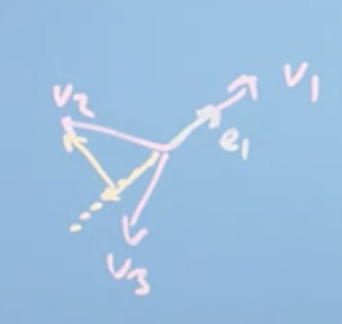

If we have a unit vector $\hat{u} = [u_1, u_2]$ and we project it onto one of the axis vectors, $\hat{e}_1 = [1, 0]$, we get a length of $u_1$.

If we then project $\hat{e}_1$ onto $\hat{u}$, we can draw a line of symmetry through the point where the two projections cross:

- The two triangles on either side are the same size, so the projection is the same length in either direction. That shows the Dot Product is symmetric and also that projection is the Dot Product.

- That explains why matrix multiplication with a vector is considered a projection onto the vectors composing the matrix (the matrix columns).
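A numerical check of the symmetry argument, as a sketch: the scalar projection of a unit vector onto an axis vector equals the projection the other way round. The vector `u_hat = [0.6, 0.8]` is an assumed example.

```python
import math


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def scalar_projection(a, onto):
    """Length of the shadow of a along the direction of `onto`."""
    return dot(a, onto) / math.sqrt(dot(onto, onto))


u_hat = [0.6, 0.8]   # assumed unit vector (0.6^2 + 0.8^2 = 1)
e1 = [1.0, 0.0]      # axis vector

proj_u_on_e1 = scalar_projection(u_hat, e1)
proj_e1_on_u = scalar_projection(e1, u_hat)

# Both projections have length u_1, the first component of u_hat.
print(math.isclose(proj_u_on_e1, proj_e1_on_u))
```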

Matrices transform into the new basis vector set

Matrices changing basis

Transforming a Vector between Basis Vectors (00:00-08:31)

- We can think of the columns of a transformation matrix as the axes of the new basis, described in our coordinate system.

- Then, how do we transform a vector from one set of basis vectors to another?

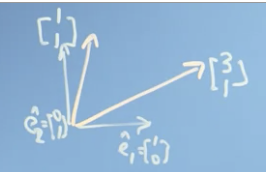

Suppose the world of Panda bear is described by 2 basis vectors, $b_1$ and $b_2$. Note that these vectors are described in our normal coordinate system, with basis vectors $\hat{e}_1 = [1, 0]$ and $\hat{e}_2 = [0, 1]$.

- In Panda's world, those basis vectors are $[1, 0]$ and $[0, 1]$.

- If we have a vector described in Panda's world as $r_P$, we can get it in our frame by multiplying by the matrix $B$ whose columns are Panda's basis vectors: $r = B r_P$.

- Which raises the question: how can we translate from our world into Panda's world?

- Using the inverse of Panda's basis vector matrix, $B^{-1}$, we can get our basis in Panda's world.

If we use that to transform the vector in our world, we should get it in Panda's world: $r_P = B^{-1} r$.
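The round trip between the two frames can be sketched in a few lines. The basis vectors and the test vector below are illustrative assumptions, not values recovered from these notes, and `inverse_2x2` is a hypothetical helper that only handles the 2 x 2 case.

```python
def mat_vec(m, v):
    """Apply matrix m (list of rows) to vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


def inverse_2x2(m):
    """Inverse of a 2 x 2 matrix via the determinant formula."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("basis vectors are linearly dependent")
    return [[d / det, -b / det], [-c / det, a / det]]


# Assumed example: Panda's basis vectors b1 = [3, 1] and b2 = [1, 1],
# written in our coordinates and stored as the COLUMNS of B.
B = [[3, 1],
     [1, 1]]

r_panda = [1.5, 0.5]                # a vector in Panda's coordinates
r_ours = mat_vec(B, r_panda)        # Panda's world -> our world
print(r_ours)                       # [5.0, 2.0]

B_inv = inverse_2x2(B)
r_back = mat_vec(B_inv, r_ours)     # our world -> Panda's world
print(r_back)                       # [1.5, 0.5]
```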

Translate between basis vectors using projections (08:32-11:14)

- If the new basis vectors are orthogonal then we can translate between bases using only the dot product.
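A sketch of the projection shortcut, assuming an orthogonal (but not unit-length) pair of basis vectors: each new coordinate is the dot product with a basis vector divided by that basis vector's squared length. The vectors below are assumed example values.

```python
def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


# Assumed orthogonal basis, written in our coordinates.
b1 = [2, 1]
b2 = [-2, 4]
assert dot(b1, b2) == 0   # the shortcut only works for orthogonal bases

r = [3, 4]                # a vector in our coordinates

# Coordinates of r in the new basis, using projections only.
r_new = [dot(r, b) / dot(b, b) for b in (b1, b2)]
print(r_new)              # [2.0, 0.5]

# Rebuild r from the new coordinates as a consistency check.
rebuilt = [r_new[0] * b1[i] + r_new[1] * b2[i] for i in range(2)]
print(rebuilt)            # [3.0, 4.0]
```

No matrix inverse is needed here, which is the practical benefit of an orthogonal basis.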

Doing a transformation in a changed basis

Doing a transformation of a Vector in a changed basis (00:00-04:13)

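- How would you do a 45° rotation in Panda's basis?

You could first write the rotation in our normal basis:

$$R = \frac{1}{\sqrt{2}}\begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}$$

Then apply it to the vector expressed in our basis, $B r_P$, where the columns of $B$ are Panda's basis vectors written in our coordinates and $r_P$ is the vector in Panda's coordinates:

$$R B r_P$$

That gives us the rotated vector in our basis. You can then multiply that by the inverse of Panda's basis matrix, $B^{-1}$, to get it in Panda's coordinate system:

$$B^{-1} R B r_P$$

In short, the rotation expressed in Panda's basis is $B^{-1} R B$.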
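The following sketch builds the rotation in a changed basis and checks that it agrees with rotating in our own frame. The basis matrix `B` and the vector `r_panda` are assumed example values, and the helpers are hypothetical 2 x 2 utilities.

```python
import math


def matmul(a, b):
    return [
        [sum(a[i][j] * b[j][k] for j in range(len(b))) for k in range(len(b[0]))]
        for i in range(len(a))
    ]


def mat_vec(m, v):
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


def inverse_2x2(m):
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


B = [[3.0, 1.0],
     [1.0, 1.0]]                    # assumed basis, columns in our coordinates
s = 1 / math.sqrt(2)
R = [[s, -s],
     [s, s]]                        # 45 degree rotation in our basis

# Rotation expressed in the changed basis: B^-1 R B.
R_panda = matmul(inverse_2x2(B), matmul(R, B))

r_panda = [1.5, 0.5]
rotated_panda = mat_vec(R_panda, r_panda)

# Same rotation done entirely in our frame, for comparison.
rotated_ours = mat_vec(R, mat_vec(B, r_panda))
print(mat_vec(B, rotated_panda), rotated_ours)
```

Reading the product right to left gives the recipe: convert into our frame, rotate, convert back.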

Making Multiple Mappings, deciding if these are reversible

Orthogonal matrices

- Matrix Transpose (00:15-01:08)

- An operation where we interchange the rows and columns of a matrix.

- Orthonormal Basis Set (01:09-06:35)

- Consider a square matrix $A$ whose columns $a_i$ are the basis vectors of a new space, with the condition that the vectors are orthogonal to each other and of unit length.

- In math: $a_i \cdot a_j = 0$ if $i \neq j$, and $a_i \cdot a_j = 1$ if $i = j$.

- When you multiply one of these matrices by its transpose, the identity matrix is returned: $A^T A = I$.

- That means $A^T = A^{-1}$ is a valid identity for these matrices.

- A matrix composed of these vectors is called an Orthogonal Matrix.

- The transpose of an orthogonal matrix is another orthogonal matrix.

- The determinant of an orthogonal matrix is 1 or -1.

- In Data Science, we want an orthonormal basis set wherever possible.
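These properties can be checked numerically. The sketch below uses a 2 x 2 rotation matrix and a reflection matrix as assumed examples of orthogonal matrices; the helper names are illustrative.

```python
import math


def transpose(m):
    """Interchange the rows and columns of a matrix."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    return [
        [sum(a[i][j] * b[j][k] for j in range(len(b))) for k in range(len(b[0]))]
        for i in range(len(a))
    ]


def det_2x2(m):
    (a, b), (c, d) = m
    return a * d - b * c


theta = math.radians(30)
A = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta), math.cos(theta)]]   # rotation: columns are orthonormal
F = [[1.0, 0.0],
     [0.0, -1.0]]                          # reflection: also orthogonal

AtA = matmul(transpose(A), A)              # should be the identity, up to rounding
print(AtA)
print(det_2x2(A), det_2x2(F))              # close to 1 and exactly -1
```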

Recognising mapping matrices and applying these to data

The Gram-Schmidt process

- Gram-Schmidt Process (00:00-06:07)

- If you have a set of vectors $v_1, v_2, \dots, v_n$ that span your space and are linearly independent (the matrix they form doesn't have a determinant of 0) but aren't orthogonal or of unit length, you can convert them into an Orthonormal Basis Set using the Gram-Schmidt Process.

- Process:

- Take the first vector in the set, $v_1$, and normalise it so it's of unit length, giving you the first basis vector: $e_1 = \frac{v_1}{|v_1|}$

We can think of $v_2$ as having a component in the direction of $e_1$ and a component that's perpendicular.

- We can find the component in the direction of $e_1$ by finding the projection of $v_2$ onto $e_1$: $v_2 \cdot e_1$

- To get it as a vector we multiply by $e_1$ (noting that it's already of unit length): $(v_2 \cdot e_1)\,e_1$

- We know that $v_2$ is equal to that plus the perpendicular component $u_2$: $v_2 = (v_2 \cdot e_1)\,e_1 + u_2$

- We can rearrange the expression to find $u_2$: $u_2 = v_2 - (v_2 \cdot e_1)\,e_1$

- If we normalise $u_2$, the result is $e_2 = \frac{u_2}{|u_2|}$

- Now to find $e_3$ from $v_3$, which we know isn't a linear combination of $v_1$ and $v_2$:

- We can project $v_3$ onto the plane of $e_1$ and $e_2$, which will result in a vector in the plane composed of $e_1$s and $e_2$s.

- We can then find the component of $v_3$ that isn't made up of $e_1$ and $e_2$: $u_3 = v_3 - (v_3 \cdot e_1)\,e_1 - (v_3 \cdot e_2)\,e_2$

- If we normalise $u_3$, we get $e_3 = \frac{u_3}{|u_3|}$: we have another unit vector that's normal to the plane.

- We can keep doing this through all $v_n$ until we have basis vectors that span the space.
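The steps above can be sketched as a short function. This is a minimal classical Gram-Schmidt under the assumption that the input vectors are linearly independent; the name `gram_schmidt`, the tolerance `tol` and the example vectors are illustrative choices.

```python
import math


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal basis built from linearly independent vectors."""
    basis = []
    for v in vectors:
        u = list(v)
        # Subtract the components of v along every basis vector found so far.
        for e in basis:
            coeff = dot(v, e)
            u = [u_i - coeff * e_i for u_i, e_i in zip(u, e)]
        length = math.sqrt(dot(u, u))
        if length < tol:
            raise ValueError("vectors are linearly dependent")
        # Normalise what is left to get the next basis vector.
        basis.append([u_i / length for u_i in u])
    return basis


# Assumed example vectors in 3D.
e1, e2, e3 = gram_schmidt([[1, 1, 1], [2, 0, 1], [3, 1, -1]])
print(e1, e2, e3)
```

For larger or nearly dependent sets, a numerically more stable variant (for example modified Gram-Schmidt or a QR decomposition from a linear algebra library) is usually preferred; this version follows the notes step by step.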

Example: Reflecting in a plane

- Challenge: performing a reflection of a vector in a mirror whose plane is unfamiliar (not aligned with our axes).

- Approach: create an orthonormal basis for the mirror's plane with the Gram-Schmidt Process.

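- You know 2 vectors in the mirror's plane: $v_1$ and $v_2$

- You have a 3rd vector out of the mirror's plane: $v_3$

- First part, find basis vectors:

- Find $e_1$: normalise $v_1$ to find $e_1 = \frac{v_1}{|v_1|}$

- Then $e_2$ starts with $u_2 = v_2 - (v_2 \cdot e_1)\,e_1$; then normalise that.

- Lastly, $e_3$ is the normalised $u_3 = v_3 - (v_3 \cdot e_1)\,e_1 - (v_3 \cdot e_2)\,e_2$

- Result is a transformation matrix described by the basis vectors: $E = \begin{bmatrix} e_1 & e_2 & e_3 \end{bmatrix}$

- In this basis the reflection is simple: $e_1$ and $e_2$ lie in the mirror's plane and stay as they are, so we flip only the third column vector: $T_E = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & -1 \end{bmatrix}$

- Now we want to apply this transformation to a vector $r$ given in our own basis, to get the reflected vector $r'$.

- Going from $r$ to $r'$ directly is hard.

But if you first convert $r$ into the $E$ basis, then apply the transformation $T_E$ and convert back into our basis, it's easy: $r' = E\,T_E\,E^{-1}\,r$

- Because $E$ is orthonormal, we know the transpose is the inverse: $r' = E\,T_E\,E^T\,r$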
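Putting the whole example together, here is a sketch that builds the orthonormal basis, forms the reflection and applies it to a vector. The vectors `v1`, `v2`, `v3` and `r` are assumed example values, not recovered from these notes, and the helper functions are illustrative.

```python
import math


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def gram_schmidt(vectors):
    """Classical Gram-Schmidt; assumes the vectors are linearly independent."""
    basis = []
    for v in vectors:
        u = list(v)
        for e in basis:
            coeff = dot(v, e)
            u = [u_i - coeff * e_i for u_i, e_i in zip(u, e)]
        length = math.sqrt(dot(u, u))
        basis.append([u_i / length for u_i in u])
    return basis


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    return [
        [sum(a[i][j] * b[j][k] for j in range(len(b))) for k in range(len(b[0]))]
        for i in range(len(a))
    ]


def mat_vec(m, v):
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


# Assumed inputs: v1 and v2 lie in the mirror's plane, v3 points out of it.
v1, v2, v3 = [1, 1, 1], [2, 0, 1], [3, 1, -1]
e1, e2, e3 = gram_schmidt([v1, v2, v3])

E = transpose([e1, e2, e3])          # columns of E are e1, e2, e3
T_E = [[1, 0, 0],
       [0, 1, 0],
       [0, 0, -1]]                   # flip only the out-of-plane direction

# E is orthonormal, so its transpose stands in for its inverse.
T = matmul(matmul(E, T_E), transpose(E))

r = [2, 3, 5]                        # assumed vector to reflect
r_reflected = mat_vec(T, r)
print(r_reflected)
```

Reflecting twice should return the original vector, which makes a convenient sanity check on `T`.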