Matrix Multiplication

Matrix multiplication is a mathematical operation between two matrices that returns a matrix.

For each row in the first matrix, take the Dot Productf …
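The row-by-row description can be sketched in plain Python. Because the excerpt is cut off, this assumes the standard definition, in which each row of the first matrix is dotted with each column of the second. The name `matmul` and the list-of-rows representation are illustrative choices, not taken from the original post.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows (illustrative helper)."""
    if len(a[0]) != len(b):
        raise ValueError("columns of a must equal rows of b")
    cols_b = list(zip(*b))  # transpose b so its columns are easy to iterate
    # Each output cell is the dot product of one row of a with one column of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


product = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)  # [[19, 22], [43, 50]]
```

The shape check reflects the usual requirement that an m×n matrix can only be multiplied by an n×p matrix, giving an m×p result.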

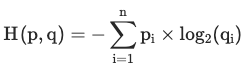

Binary Cross-Entropy (BCE), also known as log loss, is a loss function used in binary or multi-label machine learning training.

It's nearly identical to Negative …
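As a rough illustration, BCE can be computed as the mean of the per-example log losses. The function name, the `eps` clipping value, and the sample labels and predictions below are assumptions made for this sketch, not details from the original post.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy over paired labels (0 or 1) and probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log(0) is never evaluated
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


bce_loss = binary_cross_entropy([1, 0, 1], [0.9, 0.2, 0.6])
print(bce_loss)
```

Confident, correct predictions contribute a loss near 0, while confident, wrong predictions are penalised heavily.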

Categorical Cross-Entropy Loss Function, also known as Softmax Loss, is a Loss Function used in multiclass classification model training. It applies the Softmax Function to …
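One way to sketch this for a single example is to combine the Softmax step and the negative log of the target class's probability into a log-sum-exp expression. The function name and the raw-score (logit) input convention are assumptions made for illustration.

```python
import math


def categorical_cross_entropy(logits, target_index):
    """Softmax followed by negative log-likelihood of the target class.

    Uses the identity -log(softmax(z)[t]) = logsumexp(z) - z[t].
    """
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target_index]


cce_loss = categorical_cross_entropy([2.0, 1.0, 0.1], target_index=0)
print(cce_loss)
```

The loss is small when the target class has the largest score and grows as probability mass shifts to other classes.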

Cross-entropy measures the average number of bits required to identify an event if you had a coding scheme optimised for one probability distribution while the events actually follow another.
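A small sketch of that idea, assuming discrete distributions given as lists of probabilities: `p` is the distribution the events actually follow and `q` is the one the code was optimised for. Both names and the sample values are illustrative.

```python
import math


def cross_entropy_bits(p, q):
    """Average bits per event when events follow p but the code is built for q."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.5, 0.25, 0.25]
q = [0.25, 0.25, 0.5]
print(cross_entropy_bits(p, q))  # 1.75
print(cross_entropy_bits(p, p))  # 1.5
```

Coding with the mismatched distribution `q` costs 1.75 bits per event on average, compared with 1.5 bits when the code matches `p` exactly.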

Entropy is a measure of uncertainty of a random variable's possible outcomes.

It's highest when there are many equally likely outcomes. As you introduce more …
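A minimal sketch of Shannon entropy in bits, assuming a discrete distribution passed as a list of probabilities; the function name is illustrative.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


uniform = entropy_bits([0.25, 0.25, 0.25, 0.25])
skewed = entropy_bits([0.97, 0.01, 0.01, 0.01])
print(uniform, skewed)
```

Four equally likely outcomes give 2 bits, the maximum for four outcomes, while the skewed distribution scores much lower because one outcome is nearly certain.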

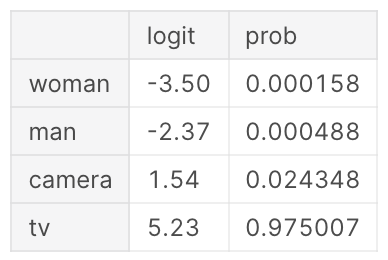

The Softmax Activation Function converts a vector of numbers into a vector of probabilities that sum to 1. It's applied to a model's outputs (or …
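As a sketch, Softmax exponentiates each value and divides by the sum of the exponentials. Subtracting the maximum first is a common numerical-stability step added here as an assumption; it does not change the result.

```python
import math


def softmax(values):
    """Convert a list of numbers into probabilities that sum to 1."""
    m = max(values)  # shift so the largest exponent is exp(0) = 1
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))
```

Larger inputs receive larger probabilities, and the relative ordering of the inputs is preserved.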

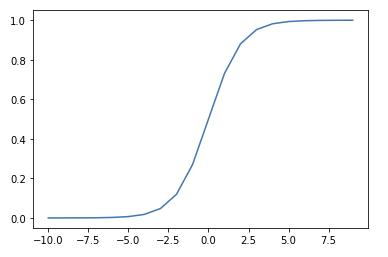

The Sigmoid function, also known as the Logistic Function, squeezes numbers into a probability-like range between 0 and 1. Used in Binary Classification model …
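A minimal sketch of the function, using the standard definition 1 / (1 + e^(-x)). The two-branch form is an assumption added here to avoid overflow for large negative inputs.

```python
import math


def sigmoid(x):
    """Logistic function: maps any real number into the range 0 to 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # for negative x, exp(x) stays small and cannot overflow
    return e / (1.0 + e)


print(sigmoid(0.0))  # 0.5
print(sigmoid(4.0), sigmoid(-4.0))
```

An input of 0 maps to exactly 0.5, and the outputs for `x` and `-x` always sum to 1.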