Articles in the permanent category

Predicate Logic

An extension of propositional logic that involves variables and quantifiers.

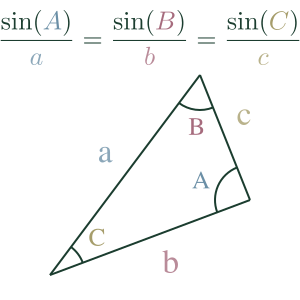

Law of Sines

States that the ratio of the sine of an angle to the length of the side opposite it is the same for all three angles of a triangle.
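
Written out as a sketch, with side lengths $a$, $b$, $c$ opposite angles $A$, $B$, $C$ (these labels are assumed here for illustration and do not come from the article card), the statement reads:

$$
\frac{\sin A}{a} = \frac{\sin B}{b} = \frac{\sin C}{c}
$$

For example, if $A = 30^\circ$, $a = 5$, and $B = 90^\circ$, then $b = \dfrac{a \sin B}{\sin A} = \dfrac{5 \cdot 1}{0.5} = 10$.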