Roblox CFrame

In Roblox, a CFrame (coordinate frame) is an object that encodes position and rotation in 3D space.
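To make the idea concrete, here is a minimal Python sketch. It is not Roblox's Luau API. It assumes a frame is stored as a 3×3 rotation matrix plus a position vector, and the class `Frame` and helper `rotation_y` are hypothetical names. Applying a frame to a point rotates the point and then translates it. Composing two frames chains those transforms.

```python
import math


# Hypothetical stand-in for a coordinate frame (not the Roblox API):
# a 3x3 rotation matrix plus a position vector.
class Frame:
    def __init__(self, rotation, position):
        self.rotation = rotation  # three rows of three floats
        self.position = position  # [x, y, z]

    def transform_point(self, v):
        # Rotate the point, then translate it: R * v + p
        return [
            sum(self.rotation[i][j] * v[j] for j in range(3)) + self.position[i]
            for i in range(3)
        ]

    def compose(self, other):
        # Chaining frames: (R1, p1) * (R2, p2) = (R1 * R2, R1 * p2 + p1)
        rotation = [
            [sum(self.rotation[i][k] * other.rotation[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)
        ]
        return Frame(rotation, self.transform_point(other.position))


def rotation_y(angle):
    # Right-handed rotation about the Y (up) axis by `angle` radians
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


# A frame positioned at (10, 0, 0) and turned 90 degrees about Y
frame = Frame(rotation_y(math.pi / 2), [10.0, 0.0, 0.0])
print(frame.transform_point([1.0, 0.0, 0.0]))  # ≈ [10.0, 0.0, -1.0]
```

Composition order matters in this sketch: `a.compose(b)` applies `b` first and then `a`. In Roblox itself, the analogous operations are written with the `*` operator (`CFrame * Vector3` and `CFrame * CFrame`).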

When you multiply a matrix by the Identity Matrix of compatible size, you get the original matrix back.
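A quick check in plain Python (no libraries; `identity` and `matmul` are illustrative helpers, not from the original notes) shows that the identity has to match the dimension it multiplies against. A 2×3 matrix needs a 3×3 identity on the right and a 2×2 identity on the left.

```python
def identity(n):
    # n x n matrix with 1s on the diagonal and 0s elsewhere
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matmul(a, b):
    # Entry (i, j) is the dot product of row i of a with column j of b
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


A = [[1, 2, 3],
     [4, 5, 6]]  # a 2x3 matrix

print(matmul(A, identity(3)) == A)  # True
print(matmul(identity(2), A) == A)  # True
```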

Notes from Coursera's Mathematics for Machine Learning: Linear Algebra by Imperial College London

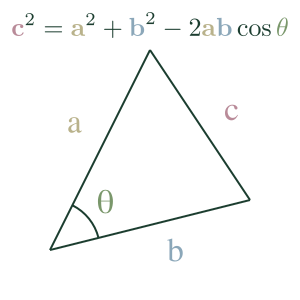

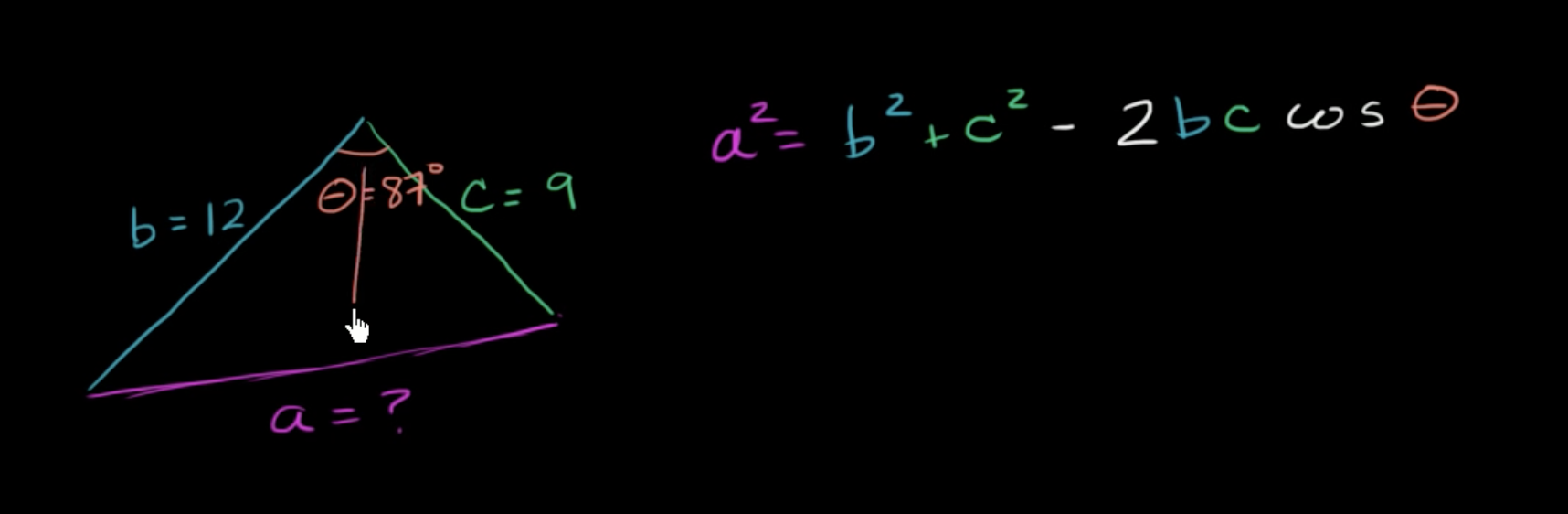

The Law of Cosines expresses the relationship between the lengths of a triangle's sides and the cosine of one of its angles.
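For a triangle with sides a and b enclosing the angle γ, the side c opposite γ satisfies c² = a² + b² - 2ab·cos(γ). The sketch below applies the formula in both directions; `third_side` and `angle_opposite` are hypothetical helper names, and angles are in radians.

```python
import math


def third_side(a, b, gamma):
    # c^2 = a^2 + b^2 - 2ab*cos(gamma), with gamma in radians
    return math.sqrt(a * a + b * b - 2 * a * b * math.cos(gamma))


def angle_opposite(a, b, c):
    # Rearranged: cos(gamma) = (a^2 + b^2 - c^2) / (2ab)
    return math.acos((a * a + b * b - c * c) / (2 * a * b))


# With a right angle, cos(90°) = 0 and the law reduces to Pythagoras
print(third_side(3, 4, math.pi / 2))           # ≈ 5.0
print(math.degrees(angle_opposite(3, 4, 5)))   # ≈ 90.0
```

Setting γ to 90° makes the cosine term vanish, which is why the Pythagorean theorem is the right-triangle special case of this law.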

Notes from the Khan Academy video series on the Law of Cosines

Notes from the Deep Learning for Coders (2020) video series by Jeremy Howard and Sylvain Gugger (fast.ai)

Matrix multiplication is an operation that takes two matrices and returns a matrix. It is defined only when the number of columns in the first matrix equals the number of rows in the second.

For each row in the first matrix, take the Dot Product …
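The row-by-column rule can be sketched in Python as follows, assuming matrices are stored as lists of rows; `dot` and `matmul` are hypothetical helper names. Entry (i, j) of the result is the dot product of row i of the first matrix with column j of the second, so an m×n matrix times an n×p matrix gives an m×p result.

```python
def dot(u, v):
    # Sum of element-wise products of two equal-length vectors
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(u, v))


def matmul(a, b):
    # a is m x n, b is n x p; the result is m x p
    if len(a[0]) != len(b):
        raise ValueError("columns of a must equal rows of b")
    columns_of_b = list(zip(*b))  # transpose b to iterate over its columns
    return [[dot(row, col) for col in columns_of_b] for row in a]


A = [[1, 2],
     [3, 4]]
B = [[5, 6],
     [7, 8]]
print(matmul(A, B))  # [[19, 22], [43, 50]]
print(matmul(B, A))  # [[23, 34], [31, 46]]
```

Swapping the operands changes the result, so matrix multiplication is not commutative in general.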