Idempotent Data Pipelines

Idempotence is a key property of a fault-tolerant, easy-to-operate data pipeline.

Developers must be vigilant about slow user interfaces.

A short document that explains how your team documents things.

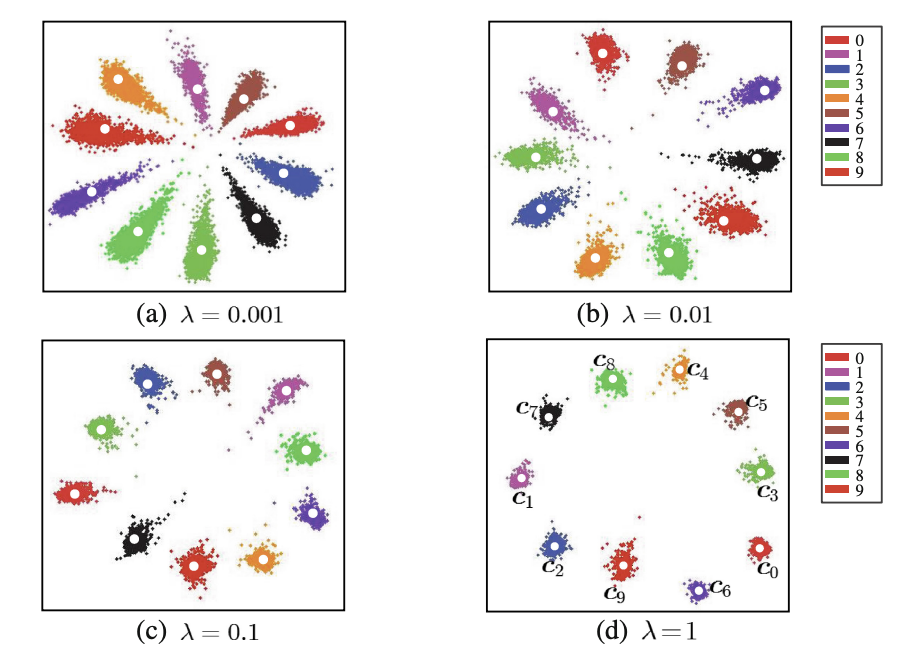

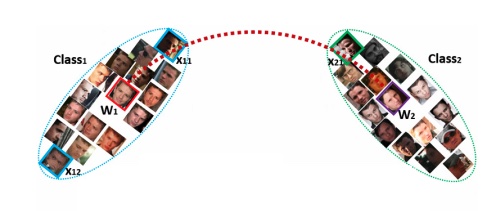

Notes from the paper "A Discriminative Feature Learning Approach for Deep Face Recognition" by Yandong Wen, Kaipeng Zhang, Zhifeng Li, and Yu Qiao.

Notes from the paper "ArcFace: Additive Angular Margin Loss for Deep Face Recognition" by Jiankang Deng, Jia Guo, Niannan Xue, and Stefanos Zafeiriou.

Notes from 3Blue1Brown's video series, "Essence of Linear Algebra".