Bucket Sort

a distribution-based sorting algorithm that divides elements into buckets, sorts each bucket, and concatenates the results
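
A minimal sketch of the idea, assuming numeric input and equal-width buckets. The function name `bucket_sort`, the `num_buckets` parameter, and the use of Python's built-in `sorted` inside each bucket are illustrative choices, not taken from the source.

```python
def bucket_sort(values, num_buckets=10):
    """Sort numbers by distributing them into equal-width buckets."""
    if len(values) < 2:
        return list(values)

    lo, hi = min(values), max(values)
    if lo == hi:
        # All elements are equal, so there is nothing to distribute.
        return list(values)

    # Assumption: buckets split the range [lo, hi] into equal widths.
    width = (hi - lo) / num_buckets
    buckets = [[] for _ in range(num_buckets)]

    for v in values:
        # Clamp so the maximum value lands in the last bucket.
        idx = min(int((v - lo) / width), num_buckets - 1)
        buckets[idx].append(v)

    # Sort each bucket, then concatenate the buckets in order.
    result = []
    for bucket in buckets:
        result.extend(sorted(bucket))
    return result
```

Equal-width buckets work best when the values are spread roughly uniformly. With heavily skewed data most elements fall into a few buckets and the per-bucket sort dominates the running time.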

Routes LLM tasks to cheaper or more powerful models based on task novelty.

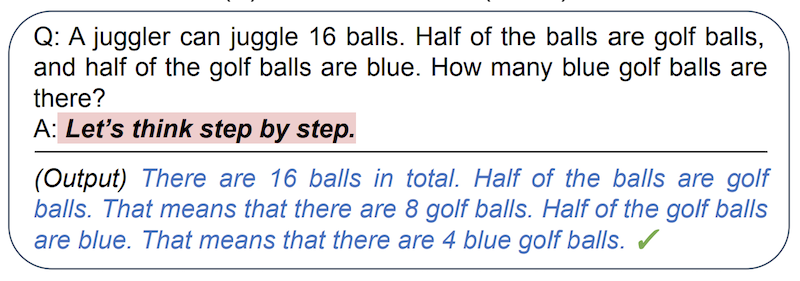

improve zero-shot prompt performance of LLMs by adding “Let’s think step by step” before each answer
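
As a rough illustration, the trigger phrase can be placed at the start of the answer slot so the model continues from it. The helper name `zero_shot_cot_prompt` and the `Q:`/`A:` layout are assumptions made for this sketch. No particular LLM API is implied.

```python
def zero_shot_cot_prompt(question: str) -> str:
    """Build a prompt whose answer slot begins with the reasoning trigger."""
    trigger = "Let's think step by step."
    # The model is expected to continue the text after the trigger phrase.
    return f"Q: {question}\nA: {trigger}"
```

The returned string would be sent to the model unchanged, and the model's continuation holds the step-by-step reasoning followed by the answer.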

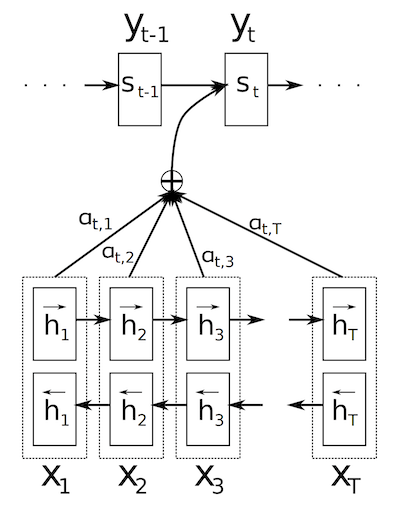

improve the Encoder/Decoder alignment with an Attention Mechanism
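
A minimal sketch of one attention step in plain Python. It assumes dot-product scoring for simplicity, whereas the summarized work may use a learned scoring function instead. The names `attention`, `decoder_state`, and `encoder_states` are illustrative.

```python
import math


def attention(decoder_state, encoder_states):
    """Return (weights, context) for one decoder step.

    Assumption: alignment scores are plain dot products between the
    decoder state and each encoder state.
    """
    # Alignment score between the decoder state and every encoder state.
    scores = [
        sum(d * e for d, e in zip(decoder_state, h)) for h in encoder_states
    ]

    # Softmax over the scores, shifted by the max for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [x / total for x in exps]

    # Context vector: attention-weighted sum of the encoder states.
    dim = len(encoder_states[0])
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context
```

The weights form a soft alignment: each decoder step gets its own distribution over source positions rather than relying on a single fixed-length summary of the input.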

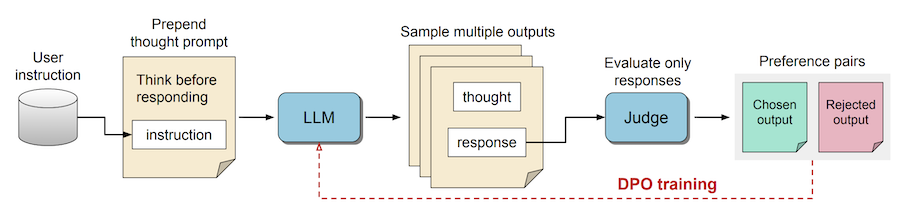

a prompting and fine-tuning method that enables LLMs to engage in a "thinking" process before generating responses

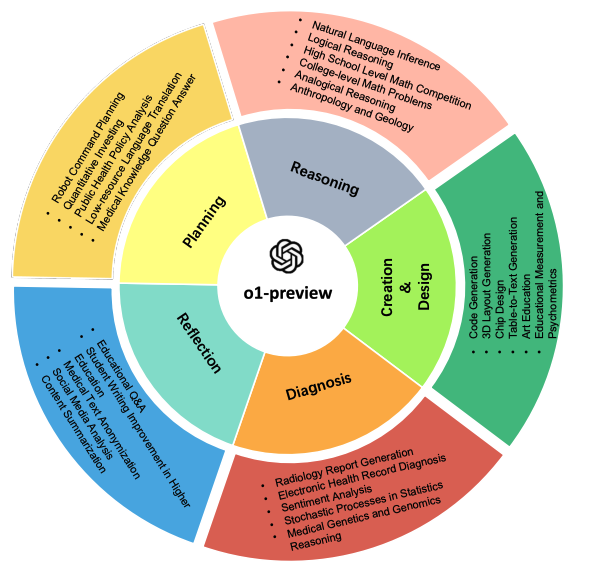

a comprehensive evaluation of o1-preview across many tasks and domains.

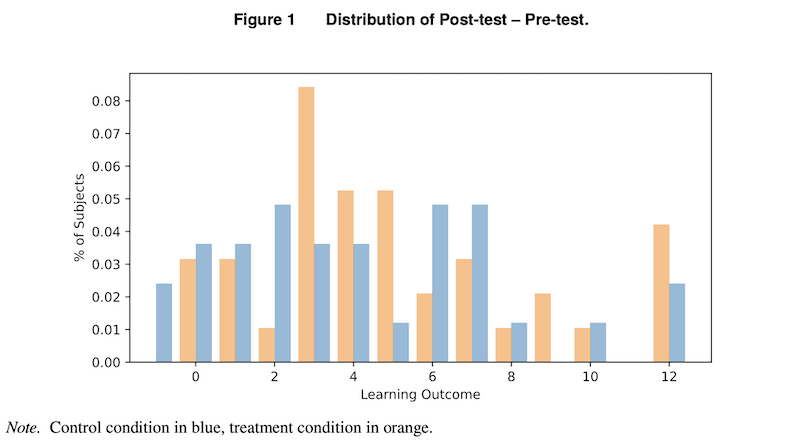

LLMs can both help and hinder learning outcomes

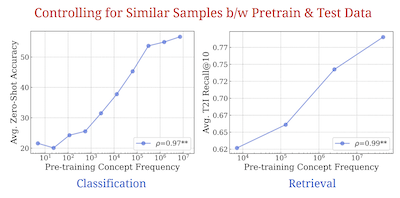

a paper showing that a model needs to see a concept exponentially more times in training to achieve linear improvements in performance on that concept
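
One way to read this claim is as a log-linear relationship. The symbols below are assumptions introduced for illustration: $f$ is how often a concept appears in the training data, $P$ is performance on that concept, and $a > 0$ and $b$ are fitted constants.

$$
P \approx a \log f + b
\quad\Longrightarrow\quad
f \approx \exp\!\left(\frac{P - b}{a}\right)
$$

Under this reading, raising $P$ by a fixed amount $\Delta$ multiplies the required frequency by $\exp(\Delta / a)$, so each equal step in performance costs a constant multiplicative factor in data.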