This is the rendered collection of notes by me, Lex Toumbourou.

You can find the source on the GitHub project.

The notes are collected using my interpretation of the Zettelkasten method.

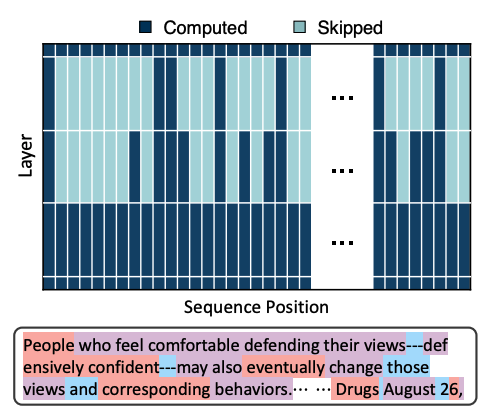

Mixture-of-Recursions: Learning Dynamic Recursive Depths for Adaptive Token-Level Computation

Optimising computation at the token-level

Absurdly Good Doggo Consistency with FLUX.1 Kontext

Experiments with multi-turn character consistent editing
